List of videos

Francisco Fernández Castaño - Extending Python, what is the best option for me?

Francisco Fernández Castaño - Extending Python, what is the best option for me? [EuroPython 2014] [22 July 2014] Python is a great language, but there are occasions when we need access to low-level operations or need to connect to a database driver written in C. With an FFI (foreign function interface) we can connect Python with other languages like C, C++ and even the new Rust. There are several alternatives to achieve this goal: native extensions, ctypes and CFFI. I'll compare these three ways of extending Python. ----- In this talk we will explore all the alternatives in the CPython ecosystem for loading external libraries. First, we'll study the principles behind shared libraries and how they work. After that we will look into the internals of CPython to understand how extensions work and how modules are loaded. Then we will study the three main alternatives for extending CPython (native extensions, ctypes and CFFI) and how to automate the process. Furthermore, we will take a look at other Python implementations and how we can extend them.
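
As a rough illustration of the ctypes option only (not native extensions or CFFI, and not code from the talk), the sketch below loads the C standard library and calls `strlen`. It assumes a POSIX-like system: `ctypes.util.find_library("c")` may return `None` in minimal environments, in which case `ctypes.CDLL(None)` loads the global symbols of the running process, which on POSIX include libc. The helper name `c_strlen` is hypothetical.

```python
import ctypes
import ctypes.util

# Locate libc; if find_library returns None, CDLL(None) falls back to the
# symbols already loaded into the interpreter process (POSIX only).
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the C signature: size_t strlen(const char *s);
# without restype, ctypes would assume the function returns a C int.
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t


def c_strlen(data: bytes) -> int:
    """Call C's strlen on a bytes object via ctypes."""
    return libc.strlen(data)


print(c_strlen(b"EuroPython"))  # 10
```

Declaring `argtypes` and `restype` by hand is the core of the ctypes approach; CFFI instead reads C declarations passed to `ffi.cdef`.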

Benoit Chesneau - Concurrent programming with Python and my little experiment

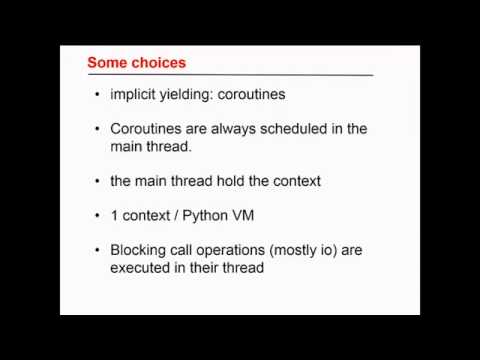

Benoit Chesneau - Concurrent programming with Python and my little experiment [EuroPython 2014] [25 July 2014] Concurrent programming in Python can be hard. A lot of solutions exist, though, and most of them are based on an event loop. This talk will present what I have discovered and tested over time, and my little experiment in porting the Go concurrency model to Python. ----- Concurrent programming in Python can be hard. A lot of solutions exist, though, and most of them are based on an event loop. This talk will present, with code examples, what I have discovered and tested over time, from asyncore to asyncio, by way of gevent, eventlet, Twisted and some newer alternatives like evergreen or gruvi. It will also present my little experiment in porting the Go concurrency model to Python, named [offset](http://github.com/benoitc/offset), how it has progressed over one year and how it became a fully usable library. This presentation is an update of the one I gave at FOSDEM 2014. It will introduce concurrency concepts and how they are implemented in the different libraries.
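
To give a flavor of the Go concurrency model mentioned here, the sketch below builds a goroutine-and-channel pattern from the standard library's `threading` and `queue` modules. It is not offset's API: `Channel`, `go`, `producer` and `collect_squares` are hypothetical names for illustration, and a `Queue` with `maxsize=1` only approximates Go channel semantics (it is a one-slot buffer, not an unbuffered rendezvous).

```python
import queue
import threading

_CLOSED = object()  # sentinel that marks a closed channel


class Channel:
    """Hypothetical Go-like channel; not the offset library's API."""

    def __init__(self) -> None:
        # One-slot buffer: a sender blocks while the previous value is unread.
        self._q: queue.Queue = queue.Queue(maxsize=1)

    def send(self, value) -> None:
        self._q.put(value)

    def close(self) -> None:
        self._q.put(_CLOSED)

    def __iter__(self):
        # Like Go's `for v := range ch`: receive until the channel is closed.
        while True:
            item = self._q.get()
            if item is _CLOSED:
                return
            yield item


def go(fn, *args) -> threading.Thread:
    """Run fn(*args) concurrently, loosely mimicking Go's `go` statement."""
    t = threading.Thread(target=fn, args=args, daemon=True)
    t.start()
    return t


def producer(ch: Channel, n: int) -> None:
    for i in range(n):
        ch.send(i * i)
    ch.close()


def collect_squares(n: int) -> list:
    ch = Channel()
    go(producer, ch, n)
    return list(ch)  # the caller consumes while the producer thread runs


print(collect_squares(5))  # [0, 1, 4, 9, 16]
```

OS threads are used here only to keep the sketch short; the event-loop based libraries listed in the talk multiplex many such tasks onto fewer threads.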

Yves - Performance Python for Numerical Algorithms

Yves - Performance Python for Numerical Algorithms [EuroPython 2014] [23 July 2014] This talk is about several approaches to implementing high-performance numerical algorithms and applications in Python. It introduces approaches such as vectorization, multi-threading, parallelization (CPU/GPU), dynamic compiling and high-throughput I/O operations. The talk is a practical one: every approach is illustrated with specific Python examples. ----- This talk is about several approaches to implementing high-performance numerical algorithms and applications in Python. It introduces approaches such as multi-threading, parallelization (CPU/GPU), dynamic compiling and high-throughput I/O operations. The talk is a practical one: every approach is illustrated with specific Python examples. The talk uses, among others, the following libraries:

* NumPy
* numexpr
* IPython.Parallel
* Numba
* NumbaPro
* PyTables
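
As a small illustration of vectorization, one of the approaches listed, the sketch below evaluates the same polynomial once with a pure-Python loop and once with NumPy array arithmetic. The polynomial, the array size and the function names are arbitrary choices for illustration, not examples from the talk; it assumes NumPy is installed.

```python
import timeit

import numpy as np


def poly_loop(xs):
    """Evaluate 3x^2 + 2x + 1 element by element in pure Python."""
    return [3.0 * x * x + 2.0 * x + 1.0 for x in xs]


def poly_vectorized(a: np.ndarray) -> np.ndarray:
    """Same polynomial, applied to the whole array by NumPy's compiled loops."""
    return 3.0 * a * a + 2.0 * a + 1.0


a = np.linspace(0.0, 1.0, 100_000)
# Both versions must agree before comparing their speed.
assert np.allclose(poly_vectorized(a), poly_loop(a.tolist()))

# Timings depend heavily on the machine, so no figures are claimed here.
t_loop = timeit.timeit(lambda: poly_loop(a.tolist()), number=3)
t_vec = timeit.timeit(lambda: poly_vectorized(a), number=3)
print(f"loop: {t_loop:.3f}s  vectorized: {t_vec:.3f}s")
```

numexpr and Numba, also named above, target the same kind of expression from different angles: numexpr evaluates array expressions without materializing large temporaries, while Numba JIT-compiles the explicit loop.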

Tarashish Mishra - Building Realtime Web Applications with WebRTC and Python

Tarashish Mishra - Building Realtime Web Applications with WebRTC and Python [EuroPython 2014] [25 July 2014] WebRTC makes building peer-to-peer real-time web applications easier. First, we'll briefly discuss what WebRTC is and how it works. Then we will explore ways to build the signaling system of a WebRTC app using Python. -----

Introduction
===========

This talk will first introduce the audience to WebRTC and then discuss how to implement the server-side logic of a WebRTC app using Python.

WebRTC is a free, open project that provides web browsers with plugin-less Real-Time Communications (RTC) capabilities via simple JavaScript APIs. What makes WebRTC special is that the data travels from one client to another without going through the server. The main functions of WebRTC can be broadly categorized into three types:

- Access and acquire video and audio streams.
- Establish a connection between peers and stream audio/video.
- Communicate arbitrary data.

WebRTC uses three different JavaScript APIs to perform these three functions:

- MediaStream (aka getUserMedia)
- RTCPeerConnection
- RTCDataChannel

The MediaStream API accesses the webcam and/or microphone of the device and acquires the video and/or audio stream from them. The RTCPeerConnection API establishes the connection between peers and streams audio and video data; it also does all the encoding and decoding of the audio/video data. The third API, RTCDataChannel, communicates arbitrary data from one client to the other. Short demos will show the functionality of these APIs.

Signaling and Session Control
========================

WebRTC uses RTCPeerConnection to communicate streaming data between browsers, but some mechanism is needed to coordinate this communication and to send control messages. This process is known as signaling. Signaling is used to exchange three types of information:

- Session control messages: to initialize or close communication and report errors.
- Network configuration: to the outside world, what are my computer's IP address and port?
- Media capabilities: what codecs and resolutions can be handled by my browser and the browser it wants to communicate with?

Signaling can be implemented using any appropriate two-way communication channel.

Implementing signaling in Python
==========================

Next, we will look at how to implement this signaling mechanism in Python (demonstration with annotated code and a live application).

### Google AppEngine and the Channel API ###

Google AppEngine has a Channel API which offers persistent connections between your application and Google servers, allowing your application to send messages to JavaScript clients in real time without polling. We'll use this Channel API to build the signaling system of our WebRTC app on top of the webapp2 and Flask frameworks.

### Flask and gevent ###

We'll implement the same signaling system again, this time on top of Flask, using gevent for the persistent connection between the browser and our application.

Outline of the talk
===============

### Intro (5 min) ###

- Who are we?
- What is WebRTC?
- Functions of WebRTC.

### WebRTC APIs and Demos (3 min) ###

- MediaStream (getUserMedia) API
- RTCPeerConnection API
- RTCDataChannel API

### Signaling in WebRTC Applications (3 min) ###

- What is signaling?
- Why is it needed?
- How to implement it?

### Implementation of signaling (16 min) ###

- Implementation using Google AppEngine and the Channel API
- Implementation using Flask and gevent

### Questions (3 min) ###
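
The core of a signaling server is independent of the transport (Channel API, gevent, WebSockets): it relays messages between the peers of a session. The sketch below is a hypothetical, framework-free in-memory relay, not the talk's code. The `SignalingRoom` class and the message types `offer`, `answer`, `candidate` and `bye` are assumptions for illustration, loosely mapped to the three kinds of signaling information: SDP offers and answers describe media capabilities, ICE candidates carry network configuration, and `bye` is a session control message.

```python
import json
from collections import defaultdict, deque

# Hypothetical message types a client may relay through the server.
ALLOWED_TYPES = {"offer", "answer", "candidate", "bye"}


class SignalingRoom:
    """In-memory relay: each message from one peer is queued for all the others."""

    def __init__(self) -> None:
        self._peers: set = set()
        self._inboxes: defaultdict = defaultdict(deque)  # peer id -> JSON strings

    def join(self, peer_id: str) -> None:
        self._peers.add(peer_id)

    def send(self, sender: str, msg_type: str, payload: dict) -> None:
        if sender not in self._peers:
            raise KeyError(f"unknown peer {sender!r}")
        if msg_type not in ALLOWED_TYPES:
            raise ValueError(f"unsupported message type {msg_type!r}")
        # Serialize once, as the message would travel over the wire.
        wire = json.dumps({"from": sender, "type": msg_type, "payload": payload})
        for peer in self._peers:
            if peer != sender:
                self._inboxes[peer].append(wire)

    def poll(self, peer_id: str) -> list:
        """Return and clear pending messages (what a long-poll handler would send)."""
        inbox = self._inboxes[peer_id]
        messages = [json.loads(wire) for wire in inbox]
        inbox.clear()
        return messages


room = SignalingRoom()
room.join("alice")
room.join("bob")
room.send("alice", "offer", {"sdp": "v=0"})
print(room.poll("bob"))  # [{'from': 'alice', 'type': 'offer', 'payload': {'sdp': 'v=0'}}]
```

In a Flask and gevent server, `poll` would sit behind a long-lived endpoint; with App Engine, delivery would go through the Channel API instead. In both cases the relay logic itself stays the same.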

Florian Wilhelm - Extending Scikit-Learn with your own Regressor

Florian Wilhelm - Extending Scikit-Learn with your own Regressor [EuroPython 2014] [25 July 2014] We show how to write your own robust linear estimator within the Scikit-Learn framework, using as an example the Theil-Sen estimator, known as "the most popular nonparametric technique for estimating a linear trend". ----- Scikit-Learn (http://scikit-learn.org/) is a well-known and popular machine learning framework used by data scientists all over the world. We show in a practical way how you can add your own estimator following the interfaces of Scikit-Learn. First we give a short introduction to the design of Scikit-Learn and its inner workings. Then we show how easily Scikit-Learn can be extended by creating your own estimator. To demonstrate this, we extend Scikit-Learn with the popular and robust Theil-Sen estimator (http://en.wikipedia.org/wiki/Theil%E2%80%93Sen_estimator), which at the time of the talk was not yet part of Scikit-Learn. We also motivate this estimator by outlining some of its superior properties compared to the ordinary least squares method (LinearRegression in Scikit-Learn).
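
A minimal sketch of what such an extension can look like, assuming NumPy and a recent scikit-learn are installed: a single-feature Theil-Sen regressor that follows the usual estimator conventions (`fit` returns `self`, fitted attributes end in an underscore, `RegressorMixin` supplies an R² `score`). The class name `SimpleTheilSen` is hypothetical and this is not the talk's implementation; it uses the textbook definition (slope = median of all pairwise slopes, intercept = median of `y - slope * x`). Scikit-learn has since gained its own `sklearn.linear_model.TheilSenRegressor`, which also handles multiple features.

```python
from itertools import combinations

import numpy as np
from sklearn.base import BaseEstimator, RegressorMixin


class SimpleTheilSen(RegressorMixin, BaseEstimator):
    """Theil-Sen fit for a single feature (illustrative sketch)."""

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        if X.ndim != 2 or X.shape[1] != 1:
            raise ValueError("SimpleTheilSen expects X with shape (n_samples, 1)")
        x = X[:, 0]
        y = np.asarray(y, dtype=float)
        # Median of the slopes through every pair of points with distinct x.
        slopes = [
            (y[j] - y[i]) / (x[j] - x[i])
            for i, j in combinations(range(len(x)), 2)
            if x[j] != x[i]
        ]
        if not slopes:
            raise ValueError("need at least two distinct x values")
        slope = float(np.median(slopes))
        self.coef_ = np.array([slope])
        self.intercept_ = float(np.median(y - slope * x))
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        return self.intercept_ + X[:, 0] * self.coef_[0]


# y = 2x + 1, except for one gross outlier at x = 5.
X_demo = [[0], [1], [2], [3], [4], [5]]
y_demo = [1, 3, 5, 7, 9, 100]
model = SimpleTheilSen().fit(X_demo, y_demo)
print(model.coef_, model.intercept_)  # [2.] 1.0
```

Because 10 of the 15 pairwise slopes come from the clean points, the median ignores the outlier entirely, whereas an ordinary least squares fit would be pulled toward it.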

Jim Baker - Scalable Realtime Architectures in Python

Jim Baker - Scalable Realtime Architectures in Python [EuroPython 2014] [25 July 2014] This talk will focus on how you can readily implement highly scalable and fault-tolerant realtime architectures, such as dashboards, using Python and tools like Storm, Kafka, and ZooKeeper. We will focus on two related aspects: composing reliable systems using at-least-once and idempotence semantics, and how to partition for locality. ----- Increasingly we are interested in implementing highly scalable and fault-tolerant realtime architectures such as the following:

* Realtime aggregation. This is the realtime analogue of working with batched map-reduce in systems like Hadoop.
* Realtime dashboards. Continuously updated views on all your customers, systems, and the like, without breaking a sweat.
* Realtime decision making. Given a set of input streams, a policy on what you would like to do, and models learned by machine learning, optimize a business process. One example is autoscaling a set of servers.

(We use realtime in the soft sense: systems that are continuously computing on input streams of data and make a best effort to keep up; it certainly does not imply hard realtime systems that strictly bound their computation times.)

Obvious tooling for such implementations includes Storm (for event processing), Kafka (for queueing), and ZooKeeper (for tracking and configuration). These components, written respectively in Clojure (Storm), Scala (Kafka), and Java (ZooKeeper), provide the desired scalability and reliability. But what may not be so obvious at first glance is that we can work with other languages, including Python, for the application level of such architectures. (If so inclined, you can also try reimplementing such components in Python, but why not use something that's been proven to be robust?) In fact, Python is likely a better language for the app level, given that it is concise, high level, dynamically typed, and has great libraries. Not to mention that it's fun to write code in!
This is especially true when we consider the types of tasks we need to write: they are very much like the data transformations and analyses we would have written for, say, a standard Unix pipeline. And no one is going to argue that writing such a filter in, say, Java is fun, concise, or even considerably faster in running time. So let's look at how you might solve larger problems. Given that it was straightforward to solve a small problem, we might approach it as follows: simply divide larger problems into small ones. For example, perhaps work with one customer at a time. And if failure is an ever-present reality, then simply ensure your code retries, just as you might have re-run your pipeline against some input files. Unfortunately, both require distributed coordination at scale. And distributed coordination is challenging, especially for real systems that will break at scale. Just putting a box in your architecture labeled **"ZooKeeper"** doesn't magically solve things, even if ZooKeeper can be a very helpful part of an actual solution. Enter the Storm framework. While Storm certainly doesn't solve all problems in this space, it can support many different types of realtime architectures and works well with Python. In particular, Storm solves two key problems for you.

**Partitioning**. Storm lets you partition streams, so you can break down the size of your problem. And if a node running your code fails, Storm will restart it. Storm also maintains topology invariants such as the number of nodes (spouts and bolts in Storm's lingo) that are running, making it very easy to recover from such failures. This is where the cleverness really begins. What can you do if you can ensure that **all the data** you need for a given continuously updated computation (what is the state of this customer's account?) can be put in **exactly one place**, and then flow the supporting data through it over time? We will look at how you can readily use such locality in your own Python code.
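
One way to picture this locality is key-based partitioning, similar in spirit to Storm's fields grouping: every event for a given customer is routed to the same partition, so that partition alone holds the customer's state. The sketch below is plain Python, not Storm's API; the `(customer_id, amount)` event format, the function names and the customer names are hypothetical. It uses `zlib.crc32` rather than the built-in `hash()`, because CPython randomizes string hashing per process, which would route the same key differently on different workers.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Stable key -> partition mapping (same answer in every process)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events, num_partitions: int) -> dict:
    """Group (customer_id, amount) events by the partition that owns the customer."""
    partitions = defaultdict(list)
    for customer_id, amount in events:
        partitions[partition_for(customer_id, num_partitions)].append((customer_id, amount))
    return dict(partitions)


def account_balances(partition_events) -> dict:
    """Per-partition state: each customer's running total lives in exactly one place."""
    totals = defaultdict(int)
    for customer_id, amount in partition_events:
        totals[customer_id] += amount
    return dict(totals)


events = [("alice", 10), ("bob", 5), ("alice", -3), ("carol", 7)]
for pid, evts in sorted(route(events, num_partitions=4).items()):
    print(pid, account_balances(evts))
```

Since no two partitions share a customer, each one can update its balances without coordinating with the others.
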
**Retries**. Storm efficiently tracks the success and failure of events being processed, through a batching scheme and other cleverness. Your code can then choose to retry as necessary. Although Storm also supports exactly-once event processing semantics, we will focus on the simpler model of at-least-once semantics. This means your code must tolerate retries; in a word, it must be idempotent. But this is straightforward. We have often written code like the following:

```python
# stream, uniquifier and process stand for your input source,
# key-extraction function and side-effecting handler
seen = set()
for record in stream:
    k = uniquifier(record)  # key that identifies duplicate deliveries
    if k not in seen:
        seen.add(k)
        process(record)  # runs once per distinct key, even if a record is retried
```

Except, of course, that any such real usage has to ensure it doesn't attempt to store all observations (first, download the Internet! ;), but removes them by implementing some sort of window, or uses data structures like HyperLogLog, as we will discuss.
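
A minimal sketch of the windowing idea, assuming a count-based window: remember only the most recent `window` distinct keys and evict the oldest first. The function name `process_at_least_once` and its `window` parameter are hypothetical. The trade-off is explicit: a retry that arrives after its key has been evicted is processed again, so the window has to cover the longest expected retry delay.

```python
from collections import OrderedDict


def process_at_least_once(stream, uniquifier, process, window: int = 10_000) -> None:
    """Skip records whose key is among the last `window` distinct keys seen."""
    seen = OrderedDict()  # insertion order = age; oldest keys come first
    for record in stream:
        k = uniquifier(record)
        if k in seen:
            continue  # duplicate delivery (e.g. a retry) inside the window
        seen[k] = None
        if len(seen) > window:
            seen.popitem(last=False)  # evict the oldest key to bound memory
        process(record)


handled = []
process_at_least_once([1, 2, 1, 3, 2], lambda r: r, handled.append, window=10)
print(handled)  # [1, 2, 3]
```

With `window=1`, the stream `[1, 2, 1]` would yield `[1, 2, 1]`, because key 1 is evicted before its duplicate arrives.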

adam - How Disqus is using Django as the basis of our Service Oriented Architecture

adam - How Disqus is using Django as the basis of our Service Oriented Architecture [EuroPython 2014] [24 July 2014] Disqus maintains the largest Django app out there. And we love it! It has, however, grown rather large and unwieldy. In the last year Disqus has had an increasing number of smaller services cropping up based on several different platforms. So this talk will be about how we do continuous deployment with our emerging service-based infrastructure.

Valerio Maggio - Traversing Mazes the pythonic way and other Algorithmic Adventures

Valerio Maggio - Traversing Mazes the pythonic way and other Algorithmic Adventures [EuroPython 2014] [22 July 2014] Graphs define a powerful mental (and mathematical) model of structure, representing the building blocks of formulations and/or solutions for many hard problems. In this talk, graphs and (some of the) main graph-related algorithms will be analysed from a very pythonic angle: the Zen of Python (e.g., beautiful is better than ugly, simple is better than complex, readability counts).
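
As an example in the spirit of the talk's title (not code from the talk), the sketch below treats a maze as an implicit graph, where each open cell is a node connected to its open neighbors, and finds a shortest path with breadth-first search. The maze encoding (`#` for walls, `.` for open cells) and the `solve_maze` name are assumptions for illustration.

```python
from __future__ import annotations

from collections import deque

Cell = tuple  # (row, column)


def solve_maze(maze: list[str], start: Cell, goal: Cell) -> list[Cell] | None:
    """Return a shortest path of cells from start to goal, or None if unreachable."""
    rows, cols = len(maze), len(maze[0])
    prev = {start: None}  # visited set and back-pointers in one dict
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk the back-pointers to rebuild the path, then reverse it.
            path = []
            while cell is not None:
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and maze[nr][nc] != "#" and nxt not in prev:
                prev[nxt] = cell
                frontier.append(nxt)
    return None


maze = [
    "..#",
    ".##",
    "...",
]
print(solve_maze(maze, (0, 0), (2, 2)))  # [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
```

Because BFS explores cells in order of distance from the start, the first time it reaches the goal the reconstructed path is a shortest one.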

R. Collins TDM Test Driven Madness

[EuroPython 2013] R. Collins TDM Test Driven Madness 02 July 2013 Track Ravioli
