EuroPython 2014

List of videos

Using python, LXC and linux to create a mass VM hosting, managed by django and angularjs

Daniel Kraft/Oliver Roch - Using python, LXC and linux to create a mass VM hosting, managed by django and angularjs [EuroPython 2014] [22 July 2014] How we created a scalable mass VM hosting service for open source web apps with Python, LXC and Linux, with a web UI based on Django and AngularJS. We'll show the underlying architecture of this service and several Linux internals that make it possible, and we'll talk about bitter failures during development. This talk will be Python- and Linux-centric, with some hints for integrating AngularJS into Django.

Schlomo Shapiro - Sponsoring Open Source

Schlomo Shapiro - Sponsoring Open Source [EuroPython 2014] ...and thereby convincing the boss

Erik Janssens - SQLAlchemy Drill

Erik Janssens - SQLAlchemy Drill [EuroPython 2014] [23 July 2014] If you have been looking to use SQLAlchemy in one of your projects but found the documentation a bit overwhelming, then this talk is for you. If you have used SQLAlchemy but feel there are some holes in your knowledge of the library, then this talk is for you as well. The idea is that you bring your laptop to the talk, with SQLAlchemy installed. At the beginning of the talk, we fire up our Python interpreter and start to explore the library in a structured way. In the next 25 minutes, we'll go hands-on through the various parts of SQLAlchemy, trying out the concepts of each part of the library and making sure the basics are well understood. ----- This talk will introduce the audience to SQLAlchemy in a well-structured way, so that the basic concepts are understood. It will be a combination of slides and interactive code editing in IPython. Both the workings of SQLAlchemy and best practices for using it will be demonstrated. I will demonstrate the basic workings of:

* the SQL generation layer
* the DDL generation
* the ORM
* the session
* transactions

The code used will allow those who have their laptop with them to try the code samples for themselves.

Thomas Aglassinger - Solution oriented error handling

Thomas Aglassinger - Solution oriented error handling [EuroPython 2014] [22 July 2014] This talk shows how to use Python's built-in error handling mechanisms to keep the productive code clean, derive helpful error messages for the user directly from the code, and release resources properly. ----- Traditionally, error handling is regarded as an annoyance by developers because it shifts the focus from the productive parts of the code, which are difficult enough already, to parts that ideally will never be called. And even when they are called, end users seem to ignore the error messages and just click "Ok" or call the help desk. Solution oriented error handling uses Python's existing try/except/finally idiom, the with statement, the assert statement and the exception hierarchy in a way that keeps the code clean and easy to maintain. It draws a clear distinction between errors that can be solved by the end user, by the system administrator and by the developer. Naming conventions and a simple set of coding guidelines ensure that helpful error messages can easily be derived from the code. Most code examples work with Python 2.6+ and Python 3.x; on a few occasions minor differences are pointed out. Topics covered are:

1. Introduction to error handling in Python
   - What are errors?
   - How to represent errors in Python
   - Detecting errors
   - Delegating errors to the caller
   - Clean resource management
2. Principles of solution oriented error handling
   - Responsibilities between user, admin and developer
   - When to use raise or assert
3. Error messages
   - What are "good" error messages?
   - How to derive error messages from the source code
   - Adding context to the error
   - How to report errors to the user
4. Solution oriented usage of Python's exception hierarchy
   - Admins fix `EnvironmentError`
   - Users fix `DataError`
   - Representing `DataError`
   - Converting exceptions to `DataError`
   - Developers fix everything else
   - Special Python exceptions not representing errors
5. Template for a solution oriented command line application
6. Best practices for `raise` and `except`
   - When to use `raise`?
   - When to use `except`?

This talk is a translation of a German [talk](https://github.com/roskakori/talks/tree/master/pygraz/errorhandling) given at the PyGRAZ user group and, in a slightly depythonized variant, at the Grazer Linux Tag 2013 ([slides and video](http://glt13-programm.linuxtage.at/events/198.de.html)).
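
A minimal sketch of the user/admin/developer split described above, assuming Python 3. `DataError` is not a built-in exception; it is defined here following the talk's naming convention, and `read_port` and `main` are hypothetical names. In Python 3, `EnvironmentError` is an alias of `OSError`, so admin-facing errors are caught as `OSError`.

```python
class DataError(Exception):
    """Errors the end user can fix by correcting input data.

    Hypothetical: defined here after the talk's naming convention.
    """


def read_port(path):
    """Read a TCP port number from the first line of a text file.

    OSError (a missing or unreadable file) is not caught here: it is
    delegated to the caller because an admin has to fix it. Bad content
    is converted to DataError with context telling the user what to change.
    """
    # The with statement closes the file even if an exception is raised.
    with open(path, encoding="utf-8") as config_file:
        text = config_file.readline().strip()
    try:
        port = int(text)
    except ValueError as error:
        # Keep the original exception as __cause__ for the developer.
        raise DataError(
            "%s: port must be an integer but is %r" % (path, text)
        ) from error
    if not 1 <= port <= 65535:
        raise DataError(
            "%s: port must be between 1 and 65535 but is %d" % (path, port)
        )
    return port


def main(path):
    """Turn each class of error into a message for the person who can fix it."""
    try:
        port = read_port(path)
    except DataError as error:
        return "cannot read port: %s" % error
    except OSError as error:
        return "cannot open configuration: %s" % error
    # Anything else propagates with a traceback: that is for the developer.
    return "listening on port %d" % port
```

The design choice is that `read_port` only converts errors it can add useful context to; deciding how each class of error is reported is left to the top-level `main`.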

holger krekel - packaging and testing with devpi and tox

holger krekel - packaging and testing with devpi and tox [EuroPython 2014] [24 July 2014] This talk discusses good ways to organise packaging and testing for Python projects. It walks through a per-company scenario and an open source scenario and explains how best to use "devpi-server" and "tox" to make sure you deliver good, well-tested and well-documented packages. As time permits, we will also discuss in-development features such as real-time mirroring and search. ----- The talk discusses the following tools:

- devpi-server for running an in-house or per-laptop Python package server
- inheritance between package indexes and from public packages on pypi.python.org
- the "devpi" client tool for uploading docs and running tests
- running tests through tox
- a summary view with two workflows: open source releases and in-house per-company development
- roadmap and in-development features of devpi and tox

(The presenter is the main author of the tools in question.)

Slavek Kabrda - Red Hat Loves Python

Slavek Kabrda - Red Hat Loves Python [EuroPython 2014] [24 July 2014] Come learn about what Red Hat is doing with Python and the Python community, and how you can benefit from these efforts. Whether it is the new Python versions in Red Hat Enterprise Linux via the new Red Hat Software Collections, compatible Python cartridges in OpenShift Platform-as-a-Service (PaaS), or being the leading contributor to OpenStack, there's a lot going on at Red Hat. We're Pythonistas, too!

Floris Bruynooghe - Advanced Uses of py.test Fixtures

Floris Bruynooghe - Advanced Uses of py.test Fixtures [EuroPython 2014] [23 July 2014] One unique and powerful feature of py.test is the dependency injection of test fixtures using function arguments. This talk aims to walk through py.test's fixture mechanism, gradually introducing more complex uses and features. This should lead to an understanding of the power of the fixture system and of how to build complex but easily managed test suites with it. ----- This talk will assume some basic familiarity with the py.test testing framework and will explore only the fixture mechanism. It will build up more complex examples, which will lead to touching on other plugin features of py.test. It is expected that people will be familiar with Python features like functions as first-class objects, closures etc.

Maximilien Riehl - Practical PyBuilder

Maximilien Riehl - Practical PyBuilder [EuroPython 2014] [25 July 2014] PyBuilder is a software build tool written in pure Python which mainly targets pure Python applications. It provides glue between existing build frameworks, thus empowering you to focus on the big picture of the build process. Demonstrations and samples will show how a simple, human-readable and declarative configuration can lead to an astonishingly well-integrated build process which will make maintainers, developers and newcomers happy. -----

# Why another build tool

Starting up a simple Python project with best practices still takes a lot of boilerplate and gluing (e.g. chaining unit tests and integration tests in the build process, adding a linter, measuring coverage, ...). It often results in extremely ugly homebrew scripts and edge-case solutions that are not reusable. There are even programs out there (e.g. cookiecutter) that encourage boilerplate code generation!

# Build orchestration

PyBuilder borrows the *maven* idea of phases (packaging, verifying, publishing, ...) to set up a fully declarative and automated build that can be run locally and remotely (on build servers) in the very same way. Rather than reinventing the wheel, it provides glue between existing solutions (like unittest, coverage, flake8, ...) through a simple but powerful plugin mechanism.

# The talk

After a more theoretical talk with a colleague at PyConDE 2013, I want to show what it's actually like to work with *PyBuilder*. This includes:

* starting up a project
* running builds
* using plugins
* writing a plugin

The demo code will be made available on GitHub, and I'll probably have recordings prepared in case something goes wrong.

Reviewer FAQ
===============

### How does PyBuilder compare to other existing solutions like zc.buildout?
As opposed to solutions like zc.buildout, which focus on the *building* of complex projects (many parts, complex dependencies), PyBuilder emphasizes the full build process for very simple projects. Undoubtedly, buildout is more powerful for building in that regard, and for such projects there is no reason to switch to PyBuilder. However, for simple projects (a few packages, pure Python) we believe that PyBuilder is better, especially if you're starting out with Python. The plugin architecture (as opposed to recipes) makes it easier to reason about what is going on. We are able to model dependencies between build phases (like "coverage" needing "unit tests" and "packaging" needing "integration tests"), which recipes cannot do. It also seems (after looking through the recipes available for buildout) that we have more focus on QA as part of the build process (linting code, differentiating between unit and integration tests, code analysis, ...). There is also a special focus on having the build descriptor written in Python (with fluent interfaces where possible), so that the configuration can be understood by reading plain English, as opposed to zc.buildout (where the configuration is an INI file) or SCons (which is very make-oriented). In the end, a big difference between most build tools and PyBuilder is that PyBuilder is more about orchestration. We didn't reinvent packaging or linting; we simply use what is already there (setuptools, flake8, pymetrics, ...). This allows users to use the tools they want without having to do the integration themselves, and still get a nice, unified build process out of it. A simple example: in buildout, code analysis can be done with

```
[buildout]
parts += code-analysis

[code-analysis]
recipe = plone.recipe.codeanalysis
directory = ${buildout:directory}/src
```

This is not readable IMHO.
In PyBuilder it can look like this:

```
from pybuilder.core import init, use_plugin

use_plugin('python.flake8')

@init
def set_properties(project):
    project.set_property('flake8_include_test_sources', True)  # lint tests too
    project.set_property('flake8_ignore', 'E501')  # ignore line length
    project.set_property('flake8_break_build', True)  # fail build on violations
```

### "It provides glue between existing build frameworks" - which ones? Could you name (some at least)?

Currently there is only a plugin for building with distutils/setuptools. Should that change (e.g. a new contender appears), it would be easy to switch using PyBuilder. Examples where glue is needed:

* Glue setuptools + unittest, so that no distribution can be shipped if tests fail
* Glue setuptools + coverage + unittest, so that no distribution can be shipped if the statement coverage is too low (configurable, of course)
* Glue setuptools + pip, so that cloned projects can be built with their dependencies without needing to pip install them manually
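
The dependency modelling between build phases mentioned in the FAQ can be illustrated with a small, hypothetical sketch. This is not PyBuilder's API: the phase names and the `build_order`/`run_build` helpers are invented for illustration, and the code uses `graphlib` from the standard library (Python 3.9+). It resolves only the phases a target needs, orders them dependencies-first, and breaks the build at the first failing phase.

```python
from graphlib import TopologicalSorter

# Hypothetical phase graph: each phase maps to the phases it depends on.
PHASES = {
    "compile_sources": set(),
    "analyze": {"compile_sources"},  # e.g. flake8
    "run_unit_tests": {"compile_sources"},
    "coverage": {"run_unit_tests"},
    "run_integration_tests": {"run_unit_tests"},
    "package": {"run_integration_tests", "coverage", "analyze"},
    "publish": {"package"},
}


def build_order(target, graph=PHASES):
    """Return only the phases `target` needs, dependencies first."""
    needed, pending = set(), [target]
    while pending:
        phase = pending.pop()
        if phase not in needed:
            needed.add(phase)
            pending.extend(graph[phase])
    subgraph = {phase: graph[phase] for phase in needed}
    return list(TopologicalSorter(subgraph).static_order())


def run_build(target, actions):
    """Run each phase in order and stop at the first failure.

    `actions` maps phase names to callables returning True on success.
    Returns the name of the failing phase, or None if everything passed.
    """
    for phase in build_order(target):
        if not actions[phase]():
            return phase
    return None
```

Asking for `package` therefore runs unit tests before coverage and never runs `publish`, and a failing coverage phase stops the build before anything is packaged.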

Watch

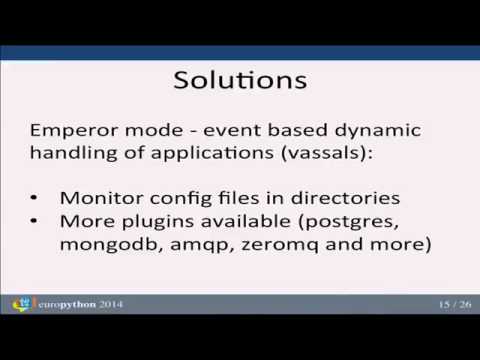

Max Tepkeev - How we switched our 800+ projects from Apache to uWSGI

Max Tepkeev - How we switched our 800+ projects from Apache to uWSGI [EuroPython 2014] [23 July 2014] During the last 7 years, the company I work for has developed more than 800 projects in PHP and Python. All this time we used Apache+nginx to host these projects. In this talk I will explain why we decided to switch all our projects from Apache+nginx to uWSGI+nginx and how we did it. ----- The talk will start by describing the setup we had for the last 7 years, i.e. Apache with mod_wsgi for Python projects and mod_php4/5 for PHP projects, plus nginx. I will explain why we used this setup for so long, what problems we faced with it and what solutions we tried before switching to uWSGI. Then I will talk about uWSGI: what it is, how it works and what features it has. I will compare configuration files to show how simple it is to configure uWSGI compared to Apache. Lastly, I will explain how we managed to switch all our 800+ projects, developed over the years in 2 different languages across 2 major versions each (PHP4/5 and Python2/3), how this switch simplified the development and administration of these projects, and the improvements we got in memory management and other areas. Of course I will concentrate mainly on our Python projects, because it is EuroPython after all and not EuroPHP ;-)
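
Both mod_wsgi and uWSGI host Python applications through the WSGI interface (PEP 3333), so the Python side of such a migration can often stay unchanged while the server configuration changes. A minimal sketch of such an application follows; the module contents and the `call` helper are illustrative assumptions, and the helper only mimics what a server does, using `wsgiref` from the standard library.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """A minimal WSGI entry point, independent of the hosting server."""
    path = environ.get("PATH_INFO", "/")
    body = ("Hello from %s\n" % path).encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


def call(app, path="/"):
    """Call a WSGI app in-process, roughly as a server would (testing aid)."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fill in the other required WSGI keys
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app(environ, start_response))
    return captured["status"], captured["headers"], body
```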

Angel Ramboi - Gamers do REST

Angel Ramboi - Gamers do REST [EuroPython 2014] [22 July 2014] An overview (sprinkled with implementation details and solutions to issues we encountered) of how Demonware uses Python and Django to build RESTful APIs and how we manage to reliably serve millions of gamers all over the world that play Activision-Blizzard’s successful franchises Call of Duty and Skylanders. Topics the presentation will touch: tech stack overview; API design; configuration handling; middleware usage for logging, metrics and error handling; authentication/authorization.
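
The talk covers middleware for logging, metrics and error handling in Django. As a framework-neutral illustration, here is a plain WSGI middleware sketch (not Django's middleware API and not Demonware's code) that records method, path, status and latency for each request and turns unhandled exceptions into a JSON 500 response. `TimingMiddleware` and the `metrics` list are hypothetical stand-ins for a real metrics client.

```python
import logging
import sys
import time

logger = logging.getLogger("api")


class TimingMiddleware:
    """Wrap a WSGI app to log requests, collect metrics and catch errors."""

    def __init__(self, app, metrics):
        self.app = app
        self.metrics = metrics  # stand-in for a real metrics client

    def __call__(self, environ, start_response):
        started = time.perf_counter()
        seen = {}

        def recording_start_response(status, headers, exc_info=None):
            seen["status"] = status  # remember the status for the metrics
            return start_response(status, headers, exc_info)

        try:
            return self.app(environ, recording_start_response)
        except Exception:
            logger.exception("unhandled error for %s", environ.get("PATH_INFO"))
            recording_start_response(
                "500 Internal Server Error",
                [("Content-Type", "application/json")],
                sys.exc_info(),
            )
            return [b'{"error": "internal server error"}']
        finally:
            # Measures time until the app returns its response iterable,
            # not until the whole body has been sent to the client.
            elapsed = time.perf_counter() - started
            entry = (environ.get("REQUEST_METHOD"), environ.get("PATH_INFO"),
                     seen.get("status"), elapsed)
            self.metrics.append(entry)
            logger.info("%s %s -> %s in %.3fs", *entry)
```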

Maciej Dziergwa - How to become an Agile company - case study

Maciej Dziergwa - How to become an Agile company - case study [EuroPython 2014] [23 July 2014] The STX Next story has the classic arc of “zero to hero.” During the last 9 years, STX Next has grown from a small business with a handful of developers to one of the biggest Python companies in Europe, and a leading proponent of agile and Scrum methodologies. We feel that now is the best moment to share our experiences in implementing effective, agile development processes in a company of nearly 100 developers. Maciej Dziergwa will discuss how he has grown his business, what challenges Python development faces today, and how he plans to take his company to the next level. In particular, we want to spread our ideas on building de-localized teams, frequently changing teams, and teams of young members who learn rapidly, creating a synergy effect. Join us during our Business Day on 23rd July 2014! Remember that 2+2 can be much more than 4...

Travis Oliphant - The Continuum Platform: Advanced Analytics and Web-based Interactive Visualization for Enterprises

Travis Oliphant - The Continuum Platform: Advanced Analytics and Web-based Interactive Visualization for Enterprises [EuroPython 2014] [24 July 2014] The people at Continuum have been involved in the Python community for decades. As a company our mission is to empower domain experts inside enterprises with the best tools for producing software solutions that deal with large and quickly-changing data. The Continuum Platform brings the world of open source together into one complete, easy-to-manage analytics and visualization platform. In this talk, Dr. Oliphant will review the open source libraries that Continuum is building and contributing to the community as part of this effort, including Numba, Bokeh, Blaze, conda, llvmpy, PyParallel, and DyND, as well as describe the freely available components of the Continuum Platform that anyone can benefit from today: Anaconda, wakari.io, and binstar.org.

Frank - Managing the Cloud with a Few Lines of Python

Frank - Managing the Cloud with a Few Lines of Python [EuroPython 2014] [23 July 2014] One of the advantages of cloud computing is that resources can be enabled or disabled dynamically. For example, if a distributed application is short on compute power, more can easily be added. But who wants to do that by hand? Python is a perfect fit for controlling the cloud. The talk introduces the package Boto, which offers an easy API to manage most of the Amazon Web Services (AWS), as well as a number of command line tools. First, some usage examples are shown to introduce the concepts behind Boto. For that, a few virtual instances with different configurations are launched, and the storage service S3 is briefly introduced. Based on that, a scalable continuous integration system controlled by Boto is developed to show how easily all the required services can be used from Python. Most of the examples will be demonstrated during the talk. They should be easily adaptable to similar use cases or serve as a starting point for different ones.

Giles Thomas - An HTTP request's journey through a platform-as-a-service

Giles Thomas - An HTTP request's journey through a platform-as-a-service [EuroPython 2014] [23 July 2014] PythonAnywhere hosts tens of thousands of Python web applications, with traffic ranging from a couple of hits a week to dozens of hits a second. Hosting this many sites reliably at a reasonable cost requires a well-designed infrastructure, but it uses the same standard components as many other Python-based websites. We've built our stack on GNU/Linux, nginx, uWSGI, Redis, and Lua -- all managed with Python. In this talk we'll give a high-level overview of how it all works, by tracing how a request goes from the browser to the Python application and its response goes back again. As well as showing how a fairly large deployment works, we'll give tips on scaling and share a few insights that may help people running smaller sites discover how they can speed things up.

Maciej/Fabrizio Romano - Python Driven Company

Maciej/Fabrizio Romano - Python Driven Company [EuroPython 2014] [25 July 2014] Adopting Python across a company brings extra agility and productivity not provided by traditional mainstream tools like Excel. This is the story of programmers teaching non-programmers from different departments to embrace Python in their daily work. ----- By introducing the IPython notebook, pandas and the other data analysis packages that make Python even more accessible and attractive, we attempted to adopt Python as a core technology across our whole company. We've challenged the dominant position of Microsoft Excel and similar tools, and dared to replace them with pandas-powered IPython notebooks. During this transitional phase, we have been inspired, and sometimes forced, to develop multiple packages that extend pandas, NumPy etc., in order to enable our colleagues in other departments to access all the data they need. Moreover, we are developing several high-level functionalities for the notebook environment. The notebook environment allows us to be extremely responsive to the changes our users ask for, since for part of the work we don't have to go through the whole traditional development process. The talk focuses on the challenges and problems we've solved and managed in order to achieve our long-term goal of creating highly agile, data-driven non-tech teams, free from the constraints imposed by mainstream technologies, all thanks to Python.

Federico Marani - Scaling with Ansible

Federico Marani - Scaling with Ansible [EuroPython 2014] [23 July 2014] Ansible is a powerful DevOps Swiss Army knife, very easy to configure and with many extensions built in. This talk will quickly introduce the basics of Ansible, then give some real-life tips on how to use this tool, from setting up dev VMs to multi-server setups. ----- Infrastructure and scaling is a topic really close to me, and I'd like the chance to talk about how we set this up in the company I work for. Our infrastructure is around 10-15 servers, provisioned on different cloud providers, so a good-sized infrastructure. The presentation will be divided into three parts: the first part will focus on comparing sysadmin and DevOps, then there will be an introduction to the basic concepts of Ansible. I want to spend most of the time on the last part, which will give some tips based on our experience with it. Many ideas will come from this presentation, https://speakerdeck.com/fmarani/devops-with-ansible, which I gave at DJUGL in London. With a longer session I will have more chances to delve into detail, especially on how we use it, from Vagrant box setup to AWS and DigitalOcean boxes, network configuration, software configuration, etc. I want to offer as many real-life tips as possible, without going too far off-topic as far as Ansible is concerned.

Richard Wall - Twisted Names: DNS Building Blocks for Python Programmers

Richard Wall - Twisted Names: DNS Building Blocks for Python Programmers [EuroPython 2014] [25 July 2014] In this talk I will report on my efforts to update the DNS components of Twisted and discuss some of the things I've learned along the way. I'll demonstrate the EDNS0, DNSSEC and DANE client support which I have been working on, and show how these new Twisted Names components can be glued together to build novel DNS servers and clients. Twisted is an event-driven networking engine written in Python and licensed under the open source MIT license. It is a platform for developing internet applications. -----

# Description

My talk will consist of four main sections. Given the 30 minute time constraint, I may shorten or drop the two introductory parts in favour of the narrative and the demonstration of interesting new APIs and code examples in the final two parts. My experience of delivering a similar talk at PyCon UK 2013 is that those are the parts that most interest the audience and prompt the most questions. Here are my proposed sections with rough time allocations (in minutes) and descriptions:

## Introducing Twisted Names (0-5)

Twisted includes a comprehensive set of DNS components, collectively known as Twisted Names.

- <https://twistedmatrix.com/trac/wiki/TwistedNames>

I will begin the talk with a quick introduction to Twisted Names and its capabilities, including one or two simple code examples.

## Introducing My Project (0-5)

With generous funding from The NLnet Foundation, I am adding EDNS(0) and DNSSEC client support to Twisted Names, including full DNSSEC verification and DANE support. In the talk I will quickly summarise the steps taken and lessons learned in securing that funding, and I hope to encourage the audience to seek funding to support their own pet OSS projects.

## What's New in Twisted Names / Project Progress Report (10)

My project plan is divided into the following broad milestones:

1. EDNS(0)
   1. OPT record
   2. Extended Message object with additional EDNS(0) items
   3. EDNS Client
2. RRSET handling
   1. Canonical Form and Order of Resource Records
   2. Receiving RRSETs
3. DNSSEC
   1. New DNSSEC Records and Lookup Methods
   2. Security-aware Non-validating Client
   3. Validating Client
4. DANE
   1. A twistd dns authoritative server capable of loading and serving TLSA records.
   2. A Twisted web client Agent wrapper which performs TLSA lookup and verification of a server certificate.
   3. A HostnameClientEndpoint which performs TLSA lookup and verification of a server certificate.
   4. A command line tool for debugging TLSA records and for verifying a certificate file against a domain name.
   5. A TLSA Record class for encoding and decoding TLSA bytes.
   6. A TLSA lookup method which accepts port, protocol and hostname and constructs a suitable TLSA domain name.

In the talk I will quickly outline these goals, report on my progress so far, and show running code examples to demonstrate the new APIs.

## Future Developments (5)

The aim of my project is to lay foundations that will eventually allow end-to-end DNSSEC verification in all the core Twisted networking components, including Twisted Conch (SSH), Mail (SMTP, POP3), Perspective Broker (RPC), Web (HTTP, XML-RPC, SOAP) and Words (XMPP, IRC). Additionally, I hope that this foundation work will encourage the development of end-to-end DNSSEC verification in many of the open source and commercial projects built on top of Twisted. I will end the talk by outlining these exciting possibilities and demonstrating some code examples that illustrate them.

## Q & A (5-10)

I'm determined to leave at least five minutes at the end for audience questions. At PyCon UK 2013 I was frustrated because I ran out of time and ended up answering questions outside the lecture theatre; questions which would have been interesting to the whole audience.
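
Two of the milestones listed above are small enough to sketch in plain Python: constructing a TLSA domain name from port, protocol and hostname, and ordering names canonically as DNSSEC requires. `tlsa_name` and `canonical_key` are hypothetical helpers, not Twisted Names APIs; they follow RFC 6698 (section 3) and RFC 4034 (section 6.1) respectively, and skip details such as escaped characters in labels.

```python
def tlsa_name(port, protocol, hostname):
    """Return the owner name for a TLSA lookup (RFC 6698, section 3).

    Hypothetical helper: port 443 over TCP for www.example.com gives
    "_443._tcp.www.example.com".
    """
    if not 0 <= port <= 65535:
        raise ValueError("port out of range: %r" % (port,))
    return "_%d._%s.%s" % (port, protocol.lower(), hostname.rstrip("."))


def canonical_key(name):
    """Sort key for DNSSEC canonical name order (RFC 4034, section 6.1).

    Labels are compared right to left as lowercased octet strings, and a
    name sorts before any longer name that ends with it. Escaped dots and
    non-ASCII labels are not handled in this sketch.
    """
    labels = [label for label in name.rstrip(".").split(".") if label]
    return tuple(label.lower().encode("ascii") for label in reversed(labels))
```

Using a tuple of byte strings as the key lets Python's built-in comparison do the work: tuples and bytes both compare element by element and sort a shorter prefix first, which matches the ordering rule in the RFC.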

Watch

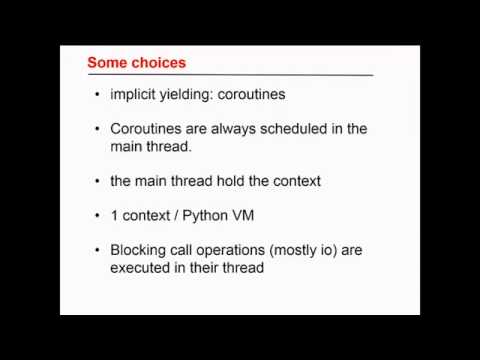

Daniel Pope - gevent: asynchronous I/O made easy

Daniel Pope - gevent: asynchronous I/O made easy [EuroPython 2014] [23 July 2014] gevent provides highly scalable asynchronous I/O without becoming a nest of callbacks, or even needing code changes. Daniel will explain how to get started with gevent, discuss patterns for its use and describe the differences from Twisted, Tornado and Tulip/asyncio. ----- It has been claimed that "Callbacks are the new GOTO". Most asynchronous I/O libraries use callbacks extensively. gevent (http://www.gevent.org) uses coroutines to provide highly scalable asynchronous I/O with a synchronous programming model that needs neither code changes nor callbacks. By elegantly monkey-patching the Python standard library, it makes both your code and all pure Python libraries asynchronous too, so a separate collection of protocol implementations (in the style of Twisted) is unnecessary. Code written like this is easier to understand, particularly for more junior developers. Crucially, I/O errors can be raised at the right places. I will introduce gevent's programming model, explain why it's easier, walk through simple code samples, and discuss experiences and metaphors for programming with it.

Ben Nuttall - Pioneering the Future of Computing Education

Ben Nuttall - Pioneering the Future of Computing Education [EuroPython 2014] [24 July 2014] How the Raspberry Pi Foundation is leading the way in the computing-in-schools revolution by providing affordable, open and connectable hardware to people of all levels of experience. Now that we have an education team, we're pushing forward with creating resources and training teachers to help deliver modern computing education around the world. All our learning resources are Creative Commons licensed and available on GitHub. We write materials that match the UK computing curriculum. ----- I'm Ben, from Raspberry Pi. I do development and outreach for the Foundation, and I work with the rest of the education team to make learning through computer science, coding and hardware hacking more accessible to all. In this talk I explain the Raspberry Pi story: its mission (the reason the Pi exists); what happened before release (getting the board into production); what happened in the first two years (the birth and growth of the community); and what's coming next (education focus, new hardware and improved software). Python is the main language used (and advocated by us) in education with Raspberry Pi. We're creating learning resources to match the new UK computing curriculum, teaching young people programming and computer science concepts with Python on the Pi, and helping teachers deliver quality material in the classroom that works towards the curriculum's objectives. With Raspberry Pi we open up possibilities for connecting to the real world in an accessible way, using Python: a powerful, high-level language that is easy for humans to read and write. We work closely with the community: hobbyists organising Raspberry Jam events; educators teaching with Raspberry Pi; the software communities and their contributions. We welcome any interested parties to get involved in helping us provide for the wider community.

Petr Viktorin - The Magic of Attribute Access

Petr Viktorin - The Magic of Attribute Access [EuroPython 2014] [22 July 2014] Have you ever wondered how the "self" argument appears when you call a method? Did you know there is a general mechanism behind it? Come learn all about attributes and descriptors. ----- The first part of this talk will describe what exactly happens when you read or write an attribute in Python. While this behavior is, of course, explained in the Python docs, more precisely in the [Data model][1] section and [related][2] [writeups][3], the documentation gives one a "bag of tools" and leaves combining them to the reader. This talk, on the other hand, will present one chunk of functionality, attribute lookup, and show how its mechanisms and customization options work together to provide the flexibility (and gotchas) Python offers. The topics covered will be:

* method resolution order, with a nod to the C3 algorithm
* instance-, class-, and metaclass-level variables
* `__dict__` and `__slots__`
* data/non-data descriptors
* special methods (`__getattr__`, `__getattribute__`, `__setattr__`, `__dir__`)

In the second part of the talk, I will show how to use the customization primitives explained before on several interesting and/or useful examples:

* A proxy object using `__getattr__`
* Generic descriptor - an ORM column sketch
* The rudimentary `@property`, method, and `staticmethod` reimplemented in pure Python (explained [here][2] and elsewhere), which lead to
  * SQLAlchemy's [`@hybrid_property`][4]
  * Pyramid's deceptively simple memoizing decorator, [`@reify`][5]
* An ["Unpacked" tuple properties][6] example to drive home the idea that descriptors can do more than provide attribute access (and mention weak dicts as a way to non-intrusively store data on an object)

(These are subject to change as I compose the talk. Also, some examples may end up interleaved with the theory.)
Hopefully I'll have time to conclude with a remark about how Python manages to be a "simple language" despite having these relatively complex mechanisms.

[1]: http://docs.python.org/3/reference/datamodel.html
[2]: http://docs.python.org/3/howto/descriptor.html
[3]: https://www.python.org/download/releases/2.3/mro/
[4]: http://docs.sqlalchemy.org/en/rel_0_9/orm/extensions/hybrid.html
[5]: http://docs.pylonsproject.org/projects/pyramid/en/latest/api/decorator.html
[6]: https://gist.github.com/encukou/9789993
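
A minimal sketch of two of the examples listed above, assuming Python 3: a pure-Python reimplementation of `property` (a data descriptor) and a memoizing non-data descriptor in the spirit of Pyramid's `@reify`. `Property`, `reify` and `Circle` are illustrative names defined here, not the real library code. The key difference is that `reify` defines no `__set__`, so the value it stores in the instance `__dict__` shadows the descriptor on later lookups.

```python
import math


class Property:
    """Pure-Python sketch of the built-in property (a data descriptor)."""

    def __init__(self, fget=None, fset=None):
        self.fget = fget
        self.fset = fset

    def __get__(self, instance, owner=None):
        if instance is None:
            return self  # attribute accessed on the class itself
        return self.fget(instance)

    def __set__(self, instance, value):
        # Defining __set__ makes this a data descriptor, which takes
        # precedence over the instance __dict__.
        if self.fset is None:
            raise AttributeError("can't set attribute")
        self.fset(instance, value)

    def setter(self, fset):
        return type(self)(self.fget, fset)


class reify:
    """Memoizing non-data descriptor in the spirit of Pyramid's @reify."""

    def __init__(self, wrapped):
        self.wrapped = wrapped
        self.__doc__ = wrapped.__doc__

    def __get__(self, instance, owner=None):
        if instance is None:
            return self
        value = self.wrapped(instance)
        # No __set__ is defined, so this instance attribute shadows the
        # descriptor and later lookups never call __get__ again.
        setattr(instance, self.wrapped.__name__, value)
        return value


class Circle:
    def __init__(self, radius):
        self._radius = radius
        self.area_computations = 0

    @Property
    def radius(self):
        return self._radius

    @radius.setter
    def radius(self, value):
        if value < 0:
            raise ValueError("radius must be non-negative")
        self._radius = value

    @reify
    def area(self):
        self.area_computations += 1
        return math.pi * self._radius ** 2
```

A consequence of that design: once `area` is cached, changing `radius` does not update it, so a `reify`-style attribute suits values that stay fixed for the object's lifetime.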

Pieter Hintjens - Our decentralized future

Pieter Hintjens - Our decentralized future [EuroPython 2014 Keynote] [23 July 2014] Pieter will talk about the urgent push towards a decentralized future. As founder of the ZeroMQ community, he will explain the vision, design and reality of distributed software systems. He’ll explain his view on the community itself, also a highly decentralized “Living System”, as Hintjens calls it. Finally he’ll talk about edgenet, a model for a decentralized Internet.

Watch

Bob Ippolito - What can python learn from Haskell?

Bob Ippolito - What can python learn from Haskell? [EuroPython 2014 Keynote] [21 July 2014] What can we learn from Erlang or Haskell for building reliable high concurrency services? Bob was involved in many Python projects but argues that for some domains there may be better methods found elsewhere. He started looking for alternatives back in 2006 when building high concurrency services at Mochi Media (originally with Twisted), which led him to the land of Erlang and later Haskell. Bob is going to talk about what he learned along the way. In particular, he’ll cover some techniques that are used in functional programming languages and how they can be used to solve problems in more performant, robust and/or concise ways than the standard practices in Python. He is also going to discuss some potential ways that the Python language and its library ecosystem could evolve accordingly.

Watch

Emily Bache - Will I still be able to get a job in 2024 if I don't do TDD?

Emily Bache - Will I still be able to get a job in 2024 if I don't do TDD? [EuroPython 2014 Keynote] [22 July 2014] Geoffrey Moore's book "Crossing the Chasm" outlines the difficulties faced by a new, disruptive technology when adoption moves from innovators and visionaries into the mainstream. Test Driven Development is clearly a disruptive technology that changes the way you approach software design and testing. It hasn't yet been embraced by everyone, but is it just a matter of time? Ten years from now, will a non-TDD practicing developer experience the horror of being labelled a technology adoption 'laggard', and be left working exclusively on dreadfully boring legacy systems? It could be a smart move to get down to your nearest Coding Dojo and practice TDD on some Code Katas. On the other hand, the thing with disruptive technologies is that even they can become disrupted when something better comes along. What about Property-Based Testing? Approval Testing? Outside-In Development? In this talk, I'd like to look at the chasm-crossing potential of TDD and some related technologies. My aim is that both you and I will still be able to get a good job in 2024.

Watch

Travis Oliphant - Python's Role in Big Data Analytics: Past, Present, and Future

Travis Oliphant - Python's Role in Big Data Analytics: Past, Present, and Future [EuroPython 2014 Keynote] [25 July 2014] Python has had a long history in Scientific Computing, which means it has had the fundamental building blocks necessary for doing Data Analysis for many years. As a result, Python has long played a role in scientific problems with the largest data sets. Lately, it has also gained traction as a tool for doing rapid Data Analysis. Python is therefore at the center of an emerging trend that is unifying traditional High Performance Computing with "Big Data" applications. In this talk I will discuss the features of Python and its popular libraries that have promoted its use in data analytics. I will also discuss the features that are still missing to enable Python to remain competitive and useful for data scientists and other domain experts. Finally, I will describe open source projects that currently occupy my attention and which can help keep Python relevant and even essential in Data Analytics for many years to come.

Watch

Constanze Kurz - One year of Snowden, what's next?

Constanze Kurz - One year of Snowden, what's next? [EuroPython 2014 Keynote] [21 July 2014] Since June 2013, thanks to the disclosures by Edward Snowden, we have been learning more and more about American and British spies’ deep appetite for information, their economic spying and the methods they use to collect data. They systematically tapped international communications on a scale that few people could have imagined. But what are the consequences for societies now that they know about the NSA metadata repository, capable of taking in billions of "events" daily to collect and analyze? Is there a way to defend against an agency with a monstrous secret budget?

Watch

Christian Tismer/Anselm Kruis - Stackless: Recent advancements and future goals

Christian Tismer/Anselm Kruis - Stackless: Recent advancements and future goals [EuroPython 2014] [23 July 2014] Stackless (formerly known as Stackless-Python) is an enhanced variant of the Python language. Stackless is best known for its lightweight microthreads, but that's not all. In this talk, Stackless core developers demonstrate recent advancements regarding multi-threading, custom scheduling and debugging with Stackless, and explain future plans for Stackless. ----- Since Python release 1.5, Stackless Python has been an enhanced variant of CPython. Stackless is best known for its addition of lightweight microthreads (tasklets) and channels. Less well known are the recent enhancements that became available with Stackless 2.7.6. In this talk, core Stackless developers demonstrate:

* The improved multi-threading support
* How to build custom scheduling primitives based on atomic tasklet operations
* The much improved debugger support
* ...

Stackless recently moved its master repository from hg.python.org/stackless to Bitbucket to allow for a more open development process. We'll summarise our experience and discuss our plans for the future development of Stackless. The talk will be held by Anselm Kruis and Christian Tismer. If we are lucky, we will also welcome Kristján Valur Jónsson from Iceland.

Watch

Sarah Mount - Message-passing concurrency for Python

Sarah Mount - Message-passing concurrency for Python [EuroPython 2014] [22 July 2014] Concurrency and parallelism in Python are always hot topics. Early Python versions had a threading library to perform concurrency over operating system threads, Python 2.6 introduced the multiprocessing library, and Python 3.2 introduced a futures library (concurrent.futures) for asynchronous tasks. In addition to the modules in the standard library, a number of packages such as gevent exist on PyPI to implement concurrency with "green threads". This talk will look at the variety of forms of concurrency and parallelism. When are the different libraries useful, and how does their performance compare? Why do programmers want to "remove the GIL", and why is it so hard to do? In particular, this talk will give an overview of various forms of message-passing concurrency which have become popular in languages like Scala and Go. A Python library called python-csp, which implements similar ideas in a Pythonic way, will be introduced, and we will look at how this style of programming can be used to avoid deadlocks, race hazards and "callback hell".
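As a rough illustration of the message-passing style (processes that share no state and communicate only over channels), here is a minimal sketch built on the standard library's `threading` and `queue` modules. It does not use python-csp's API; the function names and the `_DONE` sentinel are hypothetical.

```python
import queue
import threading

_DONE = object()  # sentinel: tells the next process in the pipeline to stop


def producer(out_ch, n):
    for i in range(n):
        out_ch.put(i)
    out_ch.put(_DONE)


def squarer(in_ch, out_ch):
    while True:
        item = in_ch.get()
        if item is _DONE:
            out_ch.put(_DONE)  # propagate shutdown downstream
            return
        out_ch.put(item * item)


def collector(in_ch, results):
    while True:
        item = in_ch.get()
        if item is _DONE:
            return
        results.append(item)


def run_pipeline(n):
    # Queues with maxsize=1 approximate CSP channels: a sender blocks until
    # the receiver has taken the previous message (true CSP channels are
    # fully synchronous, which queue.Queue does not provide).
    a, b = queue.Queue(maxsize=1), queue.Queue(maxsize=1)
    results = []
    threads = [
        threading.Thread(target=producer, args=(a, n)),
        threading.Thread(target=squarer, args=(a, b)),
        threading.Thread(target=collector, args=(b, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Because each stage only reads from its input channel and writes to its output channel, no locks are needed around shared data, which is the property message-passing designs rely on to avoid race hazards.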

Watch

Designing NRT(NearRealTime) stream processing systems: Using python with Storm and Kafka

konarkmodi - Designing NRT(NearRealTime) stream processing systems: Using python with Storm and Kafka [EuroPython 2014] [22 July 2014] The essence of near-real-time stream processing is to process huge volumes of data as they are received. This talk will focus on creating a pipeline that collects huge volumes of data using Kafka and processes them for near-real-time computations using Storm.

Watch

Design considerations while Evaluating, Developing, Deploying a distributed task processing system

konarkmodi - Design considerations while Evaluating, Developing, Deploying a distributed task processing system [EuroPython 2014] [23 July 2014] With the growth of the web, there are numerous use cases which require tasks to be executed asynchronously and in a distributed fashion. Celery is one of the most robust, scalable, extensible and easy-to-implement frameworks available for distributed task processing. While developing applications using Celery, I have gained considerable experience with the design choices one should be aware of when evaluating an existing system or developing one's own system from scratch.

Watch

Chris Clauss - Pythonista: A full-featured Python environment for iOS devices

Chris Clauss - Pythonista: A full-featured Python environment for iOS devices [EuroPython 2014] [22 July 2014] The Pythonista app delivers a full-featured Python development experience on an iPad or an iPhone. This introduction to the app will provide a rapid overview of the Pythonista user experience, features and Community Forum. Then it will focus on a few source code examples that use the GPS to deliver real-time local weather, the image library to manipulate images and convert documents, the gyroscope to understand pitch, yaw and roll, and Dropbox to back up and restore scripts, images, etc.

Watch

Dougal Matthews - Using asyncio (aka Tulip) for home automation

Dougal Matthews - Using asyncio (aka Tulip) for home automation [EuroPython 2014] [25 July 2014] This talk will cover the new asyncio library in Python 3.4 (also known as Tulip) and will use the area of home automation as a case study to explore its features. This talk will be based on code using Python 3.3+. Home automation is a growing area and the number of devices and potential applications is huge. From monitoring electricity usage, to the temperature inside or outside your house, to remote control of lights and other appliances, the options are almost endless. However, managing and monitoring these devices is typically a problem that works best with event-driven applications. This is where asyncio comes in. It was originally proposed in PEP 3156 by our BDFL, Guido van Rossum. Asyncio aims to bring a clear approach to the Python ecosystem and borrows from a number of existing solutions to come up with something clean and modern for the Python stdlib. ----- This talk will introduce asyncio and use it within the context of home automation and dealing with multiple event-driven devices. To that end, we will cover asyncio and the lessons learned from using different devices in this context. Some of the devices that will be used include:

- Raspberry Pi
- RFXCom's RFXtrx, USB serial transceiver.
- Owl CM160 electricity tracker.
- Oregon Scientific thermometers.
- Foscam IP cameras.

This talk will also briefly cover the previous solution I used, which was developed with Twisted, and compare it with my new code using asyncio.
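The event-driven pattern described above (several devices producing readings independently, one handler reacting as they arrive) can be sketched with asyncio as follows. This is a minimal illustration under stated assumptions: the device pollers are hypothetical and simulated with `asyncio.sleep`, and the sketch uses the `async`/`await` syntax (Python 3.5+) and `asyncio.run` (Python 3.7+) rather than the `yield from` style of the Python 3.3/3.4 era the talk targets.

```python
import asyncio
import contextlib


async def read_device(name, interval, readings, events):
    # Hypothetical device poller: a real one would await a serial port or
    # network read (e.g. from a USB transceiver) instead of asyncio.sleep.
    for value in readings:
        await asyncio.sleep(interval)
        await events.put((name, value))


async def handle_events(events, log):
    # Single consumer that reacts to readings as they arrive.
    while True:
        name, value = await events.get()
        log.append((name, value))  # e.g. switch a light or store the reading
        events.task_done()


async def monitor():
    events = asyncio.Queue()
    log = []
    consumer = asyncio.create_task(handle_events(events, log))
    # Both pollers run concurrently on the same event loop.
    await asyncio.gather(
        read_device("thermometer", 0.01, [21.5, 21.7], events),
        read_device("power_meter", 0.015, [350, 420, 380], events),
    )
    await events.join()  # wait until every reading has been handled
    consumer.cancel()
    with contextlib.suppress(asyncio.CancelledError):
        await consumer
    return log


if __name__ == "__main__":
    for entry in asyncio.run(monitor()):
        print(entry)
```

The interleaving of thermometer and power-meter readings in the log depends on timing, but each device's own readings stay in order, since each poller awaits its reads sequentially.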

Watch

Marc-Andre Lemburg - Advanced Database Programming with Python

Marc-Andre Lemburg - Advanced Database Programming with Python [EuroPython 2014] [25 July 2014] The Python DB-API 2.0 provides a direct interface to many popular database backends. It makes interaction with relational databases very straightforward and allows tapping into the full set of features these databases provide. The talk will cover advanced database topics which are relevant in production environments, such as locks, distributed transactions and transaction isolation. ----- The talk will give an in-depth discussion of advanced database programming topics based on the Python DB-API 2.0: locks and deadlocks, two-phase commits, transaction isolation, result set scrolling, schema introspection and handling multiple result sets. Talk slides are available on request.
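As a small taste of the transactional side of the DB-API 2.0 (`cursor()`, `execute()`, `commit()`, `rollback()`), here is a minimal sketch using the standard library's `sqlite3` driver, which implements that API. The `accounts` table and the `make_db`, `transfer` and `balances` helpers are hypothetical. The sketch covers only commit/rollback atomicity, not the locking, two-phase commit or isolation topics of the talk. The `?` placeholders are sqlite3's `qmark` paramstyle; other drivers may use `%s` or named parameters instead.

```python
import sqlite3


def make_db():
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()
    cur.execute(
        "CREATE TABLE accounts ("
        "name TEXT PRIMARY KEY, "
        "balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    cur.executemany(
        "INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)]
    )
    conn.commit()
    return conn


def transfer(conn, src, dst, amount):
    """Move money atomically: either both UPDATEs are committed or neither is."""
    cur = conn.cursor()
    try:
        for name, delta in ((dst, amount), (src, -amount)):
            cur.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (delta, name),
            )
            if cur.rowcount != 1:
                raise LookupError(f"no account named {name!r}")
        conn.commit()
        return True
    except (sqlite3.IntegrityError, LookupError):
        conn.rollback()  # undo the partial update
        return False


def balances(conn):
    cur = conn.cursor()
    cur.execute("SELECT name, balance FROM accounts ORDER BY name")
    return dict(cur.fetchall())
```

If the debit violates the `CHECK` constraint after the credit has already been applied, `rollback()` discards the credit as well, so the table never shows money appearing out of nowhere.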

Watch

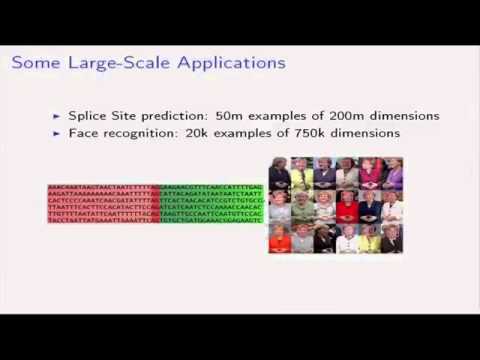

Heiko - The Shogun Machine Learning Toolbox

Heiko - The Shogun Machine Learning Toolbox [EuroPython 2014] [24 July 2014] We present the Shogun Machine Learning Toolbox, a framework for Machine Learning, which is the art of finding structure in data, with applications in object recognition, brain-computer interfaces, robotics, stock-price prediction, etc. We give a gentle introduction to ML and Shogun's Python interface, focussing on intuition and visualisation. ----- We present the Shogun Machine Learning Toolbox, a unified framework for Machine Learning algorithms. Machine Learning (ML) is the art of finding structure in data in an automated way and has given rise to a wide range of applications such as recommendation systems, object recognition, brain-computer interfaces, robotics, predicting stock prices, etc. Our toolbox offers extensive bindings with other software and computing languages, Python being the major target. The library was initiated in 1999 and has been under heavy development ever since. In addition to its mature core framework, Shogun offers state-of-the-art techniques based on the latest ML research. This is partly made possible by the 21 Google Summer of Code projects (5+8+8 since 2011) that our students successfully completed. Shogun's codebase has >20k commits made by >100 contributors, representing >500k lines of code. While its core is written in C++, a unique technique for generating interfaces allows usage from a wide range of target languages, under the same syntax. This includes in particular Python, but also Matlab/Octave, Java, C#, R, Ruby, and more. We believe that users should be able to choose their favourite language rather than us dictating this choice. The same applies to supported operating systems (Linux, Mac, Win). Shogun is part of Debian Linux. Features of Shogun include most classical ML methods such as classification, regression, dimensionality reduction, clustering, etc., most of them in different flavours.
All implemented algorithms in Shogun work on a modular data representation, which makes it easy to switch between different kinds of objects, for example strings or matrices. Common ML tasks and data IO can be carried out under a unified interface. This is also true for the various external open-source libraries that are embedded within Shogun. Code examples are provided for all implemented algorithms. The main and most complete set of examples is in the Python language. In addition, in order to push usage of Shogun in education at universities, we recently started adding more illustrative IPython notebooks. A growing list of statically rendered versions is readily available from our [website](http://www.shogun-toolbox.org/page/documentation/notebook); they implement a cross-over of tutorial-style explanations, code, and visualization examples. We even took this up a notch and started building our own IPython notebook server with Shogun installed in the cloud at (try the cloud button in notebook view). This allows users to try Shogun without installation via the IPython notebook web interface. All example notebooks can be loaded, interactively modified, and executed. In addition, using the Python Django framework, we built a collection of interactive web demos where users can play around with basic ML algorithms: [demos](http://www.shogun-toolbox.org/page/documentation/demo). In the proposed talk, we will give a gentle and general introduction to ML and the core functionality of Shogun, with a focus on its Python interface. This includes solving basic ML tasks such as classification and regression, and some of the more recent features, such as last year's GSoC projects and their IPython notebook writeups. ML material will be presented with a focus on intuition and visualisation, and no previous familiarity with ML methods is required.

## Key points in the talk

* What are the goals in ML?
* Example problems in ML (classification, regression, clustering)
* Some basic algorithm ideas
* Focus on Visualisation, not Maths

## Intended Audience

* All people dealing with data (data scientists, big-data hackers) who are looking for tools to deal with it
* People with a general interest but no education in Machine Learning
* People interested in the technology behind Shogun (SWIG, cloud notebook server, web demos)
* People from the ML community (scipy-stack)
* ML scientists/Statisticians

## Code examples

* [Classification](https://github.com/shogun-toolbox/shogun/blob/develop/examples/undocumented/python_modular/classifier_libsvm_modular.py)
* [Clustering](https://github.com/shogun-toolbox/shogun/blob/develop/examples/undocumented/python_modular/graphical/em_2d_gmm.py)
* [Source separation](https://github.com/shogun-toolbox/shogun/blob/develop/examples/undocumented/python_modular/graphical/converter_jade_bss.py)
* [IPython notebook examples](http://www.shogun-toolbox.org/page/documentation/notebook)

### Slide examples

See our EuroPython 2010 [slides](https://www.drop

Watch

Andreas Pelme - Introduction to pytest

Andreas Pelme - Introduction to pytest [EuroPython 2014] [23 July 2014] pytest is a full-featured testing tool that makes it possible to write “pythonic” tests. This talk will introduce pytest and some of its unique and innovative features. It will help you get started with pytest for new or existing projects by showing basic usage and configuration. ----- This talk will introduce pytest and show some of its unique and innovative features. It will show how to get started using it and some of the most important features. One of these features is the ability to write tests in a more “pythonic” way by using the assert statement for assertions. Another feature in pytest is fixtures, a way to handle test dependencies in a structured way. This talk will introduce the concept of fixtures and show how they can be used. No previous knowledge of pytest is required: this talk is for people who are new to testing or who have experience with other Python testing tools such as unittest or Nose.

Watch

Markus Zapke-Gründemann - Writing multi-language documentation using Sphinx

Markus Zapke-Gründemann - Writing multi-language documentation using Sphinx [EuroPython 2014] [23 July 2014] How to write multi-language documentation? What tools can you use? What mistakes should you avoid? This talk is based on the experiences I gathered while working on several multi-language documentation projects using Sphinx. ----- Internationalized documentation is a fairly new topic, and there are different approaches to it. I will talk about how Sphinx's internationalization support works, which tools and services I use, and how to organize the translation workflow in an Open Source project. Finally, I will look at what the future of internationalization in Sphinx might bring.

Watch

Reimar Bauer - pymove3D - Python moves the world - Attractive programming for young people.

Reimar Bauer - pymove3D - Python moves the world - Attractive programming for young people. [EuroPython 2014] [22 July 2014] For the second time, the Python Software Verband e.V. is organizing a contest for school students. The task is to write a Python program that runs in Blender and uses its 3D capabilities. The talk gives an overview of the experience we have gained with these ideas and how we want to continue.

Watch

Robert Lehmann - Teaching Python

Robert Lehmann - Teaching Python [EuroPython 2014] [22 July 2014] Using Python to bring people closer to programming has been popular for a while. But what are the most effective ways to do so? The OpenTechSchool reports. ----- Python has been used in educational programmes for a long time. With such a broad range of material, navigating the landscape of Python tutorials is hard indeed. This talk will look at successful Python teaching material. From the numerous iterations our material has gone through, we draw conclusions on what's crucial in teaching Python. It will introduce how the OpenTechSchool teaches Python and which measures it found most effective in spreading programming in general and Python in particular. Among these are rapid feedback, supervised learning, localization, and knowing your target audience. The author is a member of the OpenTechSchool, a free community initiative which offers Python workshops on a number of topics. Some of the workshops have been running for more than two years now. He wrote the first versions of "Python for beginners," a workshop which has been used in many cities to teach Python to programming novices.

Watch

Mislav Stipetic/Darko Ronić - Mobile Games to the Cloud With Python

Mislav Stipetic/Darko Ronić - Mobile Games to the Cloud With Python [EuroPython 2014] [22 July 2014] When a mobile game development company decides to switch to more cloud-based development, it faces obstacles different from those it is used to on mobile devices. This talk explains how Python provided us with most of the infrastructure for this task and how a Python game backend was built as a result. -----

#### The Talk

This talk has two goals. Our first is to show the audience the lessons we learned during a project which moved a simple mobile game to a server backend. In addition, we want to describe how such a system works in a real-life example, and show which problems and requirements arise in its creation. When the audience leaves the talk, they will know how a real-life mobile game uses Python to power its backend servers.

#### The Problem

Most game development for mobile devices is focused on running the game on the device. The game designers and game developers play the primary role in creating the product. The server backend plays a supporting role, providing a multiplayer or social experience to the users. At Nanobit Ltd., things were also done that way. We had a small Python infrastructure built around Django which provided a small portion of the multiplayer experience for the players. The majority of development was still focused on playing the game on the device. That way of thinking was put to the test when we decided to center our future games around the multiplayer experience. Because our infrastructure at the time was not enough for what we had in mind, we had to start from scratch. The decision was made to use Python as the center of our new infrastructure. To achieve this, we needed a server backend that would allow the game to be played “in the cloud”, with the device being only a terminal for the player. Most of the game logic would have to be processed in the cloud, which meant that each player required a constant connection to the backend, and with over 100,000 players in our previous games, that presented a challenge. How do you build an infrastructure which can support that? Since every user action had to be sent to the backend, how do you process thousands of them quickly enough? Those problems were big, and they were just the start.

#### The Solution

The design of the backend took a couple of months and produced a scalable infrastructure based on “workers” developed in Python, “web servers” that use Tornado, and a custom message queue which connects the two. The storage part is a combination of Riak and Redis. Since the backend is scalable, new workers and new web servers have to be deployed easily, so an orchestration module was built using Fabric. The scalability and launching of new workers and web servers was achieved using Docker for the creation and deployment of containers. Each container represents one module of the system (worker, web server, queue). The end result can now support all of our future games and only requires the game logic of each game to be added to the workers.

#### The Technologies

* Python for coding the game logic and web servers. More than 90% of the system was written in Python.
* Fabric
* SQLAlchemy
* Riak
* Redis
* ZeroMQ
* nginx
* Docker
* Websockets
* AWS

#### The Lessons Learned

* How to tune the backend to handle the increasing number of active players.
* How to tackle frequent connection drops and the reachability issues of poor mobile Internet connections in Tornado, with a little help from Redis.
* How to prevent users from trying to outsmart the system by rejecting illegal moves.
* How to enable game profile syncing and live updating.
* Improving the performance of workers by prioritizing the data being stored to databases (Riak, SQL).
* New issues and lessons show up all the time, so there will definitely be more of them by the time of the conference.

#### Basic Outline

1. Intro (5 min)
   1. Who are we?
   2. How was Python used in our previous games
   3. Why we decided to change it all
2. Requirements (6 min)
   1. What was the goal of creating the game backend
   2. Why was Python our first choice
3. Python backend (14 min)
   1. The architecture of the backend
   2. Which technologies did we use and how were they connected together
   3. How the backend handles the game logic
   4. Lessons learned
4. Questions & Answers (5 min)
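The architecture above keeps the game logic in Python workers that consume player actions from a message queue, and one lesson learned is rejecting illegal moves on the server. A minimal sketch of that idea follows, assuming a stdlib `queue.Queue` in place of the custom message queue and entirely hypothetical game rules: `PRICES`, `PlayerState`, `apply_action` and `worker` are illustrative names, not Nanobit's code.

```python
import queue
from dataclasses import dataclass, field

# Hypothetical game rules: building prices in coins.
PRICES = {"farm": 30, "mill": 80}


@dataclass
class PlayerState:
    coins: int = 100
    buildings: list = field(default_factory=list)


def apply_action(state, action):
    """Validate a client action against the authoritative server-side state.
    The client's own view of its coins is never trusted."""
    if action.get("type") != "build":
        return False, "unknown action"
    price = PRICES.get(action.get("item"))
    if price is None:
        return False, "unknown item"
    if state.coins < price:
        return False, "not enough coins"
    state.coins -= price
    state.buildings.append(action["item"])
    return True, "ok"


def worker(actions, players, results):
    """Consume (player_id, action) messages until a None sentinel arrives."""
    while True:
        msg = actions.get()
        if msg is None:
            break
        player_id, action = msg
        state = players.setdefault(player_id, PlayerState())
        ok, reason = apply_action(state, action)
        results.append((player_id, ok, reason))
```

Because the worker owns the authoritative state, a modified client that claims to afford a building it cannot pay for simply receives a rejection; in a scaled-out deployment the same function could run in many workers as long as each player's actions are routed to one of them.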

Watch

Josef Heinen - Scientific Visualization with GR

Josef Heinen - Scientific Visualization with GR [EuroPython 2014] [25 July 2014] Python developers often get frustrated when managing visualization packages that cover the specific needs of scientific or engineering environments. The GR framework could help. GR is a library for visualization applications ranging from publication-quality 2D graphs to the creation of complex 3D scenes, and can easily be integrated into existing Python environments or distributions like Anaconda. ----- Python has long been established in the software development departments of research and industry, not least because of the proliferation of libraries such as *SciPy* and *Matplotlib*. However, when processing large amounts of data, in particular in combination with GUI toolkits (*Qt*) or three-dimensional visualizations (*OpenGL*), it seems that Python, as an interpreted programming language, may be reaching its limits. ---

*Outline*

- Introduction (1 min)
  - motivation
- GR framework (2 min)
  - layer structure
  - output devices and capabilities
- GR3 framework (1 min)
  - layer structure
- Output capabilities (3 min)
  - high-resolution images
  - POV-Ray scenes
  - OpenGL drawables
  - HTML5 / WebGL
- Simple 2D / 3D examples (2 min)
- Interoperability (PyQt/PySide, 3 min)
- How to speed up Python scripts (4 min)
  - Numpy
  - Numba (Pro)
- Animated visualization examples (live demos, 6 min)
  - physics simulations
  - surfaces / meshes
  - molecule viewer
  - MRI voxel data
- Outlook (1 min)

*Notes*

Links to similar talks, tutorials or presentations can be found [here][1]. Unfortunately, most of them are in German. The GR framework has already been presented in a talk at PyCon DE [2012][2] and [2013][3], during a [poster session][4] at PyCon US 2013, and at [PythonCamps 2013][5] in Cologne. The slides for the PyCon.DE 2013 talk can be found [here][6]. As part of a collaboration, the GR framework has been integrated into [NICOS][7] (a network-based control system written entirely in Python) as a replacement for PyQwt.

[1]: http://gr-framework.org/
[2]: https://2012.de.pycon.org/programm/schedule/sessions/54
[3]: https://2013.de.pycon.org/schedule/sessions/45/
[4]: https://us.pycon.org/2013/schedule/presentation/158/
[5]: http://josefheinen.de/rasberry-pi.html
[6]: http://iffwww.iff.kfa-juelich.de/pub/doc/PyCon_DE_2013
[7]: http://cdn.frm2.tum.de/fileadmin/stuff/services/ITServices/nicos-2.0/dirhtml/

Watch

andrea crotti - Metaprogramming, from decorators to macros

andrea crotti - Metaprogramming, from decorators to macros [EuroPython 2014] [24 July 2014] Starting off with the meaning of metaprogramming, we quickly dive into the different ways Python allows it. First we talk about class and function decorators; when decorators are not enough anymore, we'll explore the wonders of metaclasses. In the last part of the talk we'll talk about macros, first in Lisp and then using the amazing macropy library. ----- This talk is a journey into the wonderful world of metaprogramming. We start off with the meaning of metaprogramming and what it can be used for. Then we look at what can be done in Python, introducing function and class decorators. When decorators are not enough anymore, we move to the black magic of metaclasses, showing how we can implement a simple Django-like model with them. In the bonus track we'll talk about macros as the ultimate metaprogramming weapon, showing briefly how Lisp macros work and introducing the amazing [macropy library](https://github.com/lihaoyi/macropy).
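A simple Django-like model built with a metaclass might look roughly like the sketch below. `Field`, `ModelMeta`, `Model` and `Person` are hypothetical names chosen for illustration; this is not Django's API or the speaker's code. The metaclass runs once per class definition, collects the declared `Field` objects (including inherited ones) into `_fields`, and removes them from the class namespace so instances hold plain values.

```python
class Field:
    def __init__(self, type_, default=None):
        self.type = type_
        self.default = default
        self.name = None  # filled in by the metaclass


class ModelMeta(type):
    def __new__(mcls, name, bases, namespace):
        fields = {}
        for base in bases:  # inherit fields declared on parent models
            fields.update(getattr(base, "_fields", {}))
        for key, value in list(namespace.items()):
            if isinstance(value, Field):
                value.name = key
                fields[key] = value
                del namespace[key]  # instances will hold plain values instead
        cls = super().__new__(mcls, name, bases, namespace)
        cls._fields = fields
        return cls


class Model(metaclass=ModelMeta):
    def __init__(self, **kwargs):
        for name, field in self._fields.items():
            value = kwargs.pop(name, field.default)
            if value is not None and not isinstance(value, field.type):
                raise TypeError(f"{name} must be {field.type.__name__}")
            setattr(self, name, value)
        if kwargs:
            raise TypeError(f"unknown fields: {', '.join(kwargs)}")


class Person(Model):
    name = Field(str)
    age = Field(int, default=0)
```

Since Python 3.6, part of this job (telling each field its attribute name) can also be done without a metaclass via the `__set_name__` hook; the metaclass remains useful when the class as a whole needs to be inspected or rewritten at creation time.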

Watch

Dave Halter - Identifying Bugs Before Runtime With Jedi

Dave Halter - Identifying Bugs Before Runtime With Jedi [EuroPython 2014] [23 July 2014] Finding bugs before runtime has been an incredibly tedious task in Python. Jedi is an autocompletion library with interesting capabilities: it understands a lot of the dynamic features of Python. I will show you how we can use the force of (the) Jedi to identify bugs in your Python code. It's not just another pylint. It's way better. ----- Jedi is an autocompletion library for Python that has gained quite a following over the last year. There are plugins for the most popular editors (VIM, Sublime, Emacs, etc.), and mainstream IDEs like Spyder are switching to Jedi. Jedi basically tries to redefine the boundaries of autocompletion in dynamic languages. Most people still think that there's no hope for decent autocompletion in Python. This talk will argue the opposite: that decent autocompletion is very close. While the first part will be about Jedi, the second part of this talk will discuss the future of dynamic analysis. Dynamic analysis is what I call the parts that static analysis doesn't cover. The hope is to create a kind of "compiler" that doesn't execute code but reports additional bugs in your code (AttributeErrors and the like). I still have to work out the details of the presentation. I should also add that I'm currently working full-time on Jedi and that there are going to be some major improvements before the conference. Autocompletion and static/dynamic analysis, as well as refactoring, are hugely important tools for a dynamic language IMHO, because they can mitigate the only big disadvantage compared to static languages: finding bugs before running your code.

Watch

Valerio Maggio - Scikit-learn to "learn them all"

Valerio Maggio - Scikit-learn to "learn them all" [EuroPython 2014] [24 July 2014] Scikit-learn is a powerful library, providing implementations for many of the most popular machine learning algorithms. This talk will provide an overview of the "batteries" included in Scikit-learn, along with working code examples and insights into its internals, in order to get the best out of our machine learning code. ----- **Machine Learning** is about *using the right features, to build the right models, to achieve the right tasks* [[Flach, 2012]][0]. However, to come up with a definition of what **right** actually means for the problem at hand, one has to analyse huge amounts of data and evaluate the performance of different algorithms on these data. Moreover, deriving a working machine learning solution for a given problem is far from being a *waterfall* process. It is an iterative process in which continuous refinements are required both for the data to be used (i.e., the *right features*) and for the algorithms to apply (i.e., the *right models*). In this scenario, Python has proven very useful to practitioners and researchers: its high-level nature, in combination with the available tools and libraries, allows them to rapidly implement working machine learning code without *reinventing the wheel*. [**Scikit-learn**](http://scikit-learn.org/stable/) is an actively developed Python library, built on top of the solid `numpy` and `scipy` packages. Scikit-learn (`sklearn`) is an *all-in-one* software solution, providing implementations of several machine learning methods, along with datasets and (performance) evaluation algorithms. These "batteries" included in the library, in combination with a nice and intuitive API, have made scikit-learn one of the most popular Python packages for writing machine learning code. In this talk, a general overview of scikit-learn will be presented, along with brief explanations of the techniques the library provides out of the box.
These explanations will be supported by working code examples and by insights into the algorithms' implementations, aimed at providing hints on how to extend the library code. Moreover, the advantages and limitations of the `sklearn` package will be discussed in comparison with other existing machine learning Python libraries (e.g., [`shogun`](http://shogun-toolbox.org "Shogun Toolbox"), [`pyML`](http://pyml.sourceforge.net "PyML"), [`mlpy`](http://mlpy.sourceforge.net "MLPy")). Finally, examples of applications of scikit-learn to big data and computationally intensive tasks will also be presented. The general outline of the talk is as follows (the order of the topics may vary): * Intro to Machine Learning * Machine Learning in Python * Intro to Scikit-Learn * Overview of Scikit-Learn * Comparison with other existing ML Python libraries * Supervised Learning with `sklearn` * Text Classification with SVM and Kernel Methods * Unsupervised Learning with `sklearn` * Partitional and Model-based Clustering (i.e., k-means and Mixture Models) * Scaling up Machine Learning * Parallel and Large Scale ML with `sklearn` The talk is intended for an intermediate to advanced audience. It requires basic math skills and a good knowledge of the Python language. Good knowledge of the `numpy` and `scipy` packages is also a plus. [0]: http://goo.gl/BnhoHa "Machine Learning: The Art and Science of Algorithms that Make Sense of Data, *Peter Flach, 2012*"
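Scikit-learn exposes partitional clustering through `sklearn.cluster.KMeans`. To show what the k-means idea itself does, here is a minimal plain-Python sketch of Lloyd's algorithm. It is not scikit-learn's implementation, which adds k-means++ initialisation by default and vectorised NumPy code; `kmeans` and `nearest` are hypothetical helper names.

```python
import math
import random


def nearest(point, centroids):
    """Index of the centroid closest to point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen input points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    labels = [nearest(p, centroids) for p in points]
    return centroids, labels


# Two well-separated groups of 2-D points.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(points, k=2)
```

On these two well-separated groups, the first three points end up with one label and the last three with the other. The result of k-means in general depends on the initial centroids, which is why scikit-learn runs several initialisations and keeps the best one.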

Watch

synasius - How to make a full fledged REST API with Django OAuth Toolkit

synasius - How to make a full fledged REST API with Django OAuth Toolkit [EuroPython 2014] [22 July 2014] The world is going mobile, and the need for a backend that talks to your apps is becoming more and more important. What if I told you writing REST APIs in Python is so easy you don’t need to be a backend expert? Take generous tablespoons of Django, mix thoroughly with Django REST Framework and dust with Django OAuth Toolkit to bake the perfect API in minutes.
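As a rough sketch of how the three ingredients are wired together, assuming a recent Django, Django REST Framework and Django OAuth Toolkit release (module paths have changed between versions, so check the documentation for the version you use), the project settings and URL configuration could look like this. The `o/` URL prefix is an arbitrary choice for illustration.

```python
# settings.py (excerpt)
INSTALLED_APPS = [
    "django.contrib.admin",
    "django.contrib.auth",
    "django.contrib.contenttypes",
    "django.contrib.sessions",
    "django.contrib.messages",
    "django.contrib.staticfiles",
    "oauth2_provider",  # Django OAuth Toolkit
    "rest_framework",   # Django REST Framework
]

REST_FRAMEWORK = {
    # Authenticate API requests with OAuth2 access tokens...
    "DEFAULT_AUTHENTICATION_CLASSES": (
        "oauth2_provider.contrib.rest_framework.OAuth2Authentication",
    ),
    # ...and reject anonymous requests by default.
    "DEFAULT_PERMISSION_CLASSES": (
        "rest_framework.permissions.IsAuthenticated",
    ),
}

# urls.py (excerpt): expose the OAuth2 endpoints (authorize, token, ...).
from django.urls import include, path

urlpatterns = [
    path("o/", include("oauth2_provider.urls", namespace="oauth2_provider")),
]
```

With these defaults, every REST Framework view requires a valid access token unless it overrides its own permission classes.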

Watch

Thomas Wiecki - Probabilistic Programming in Python

Thomas Wiecki - Probabilistic Programming in Python [EuroPython 2014] [24 July 2014] Probabilistic Programming allows flexible specification of statistical models to gain insight from data. The high interpretability and the ease with which different sources can be combined have huge value for Data Science. PyMC3 features next-generation sampling algorithms, an intuitive model specification syntax, and just-in-time compilation for speed, to allow estimation of large-scale probabilistic models. ----- Probabilistic Programming allows flexible specification of statistical models to gain insight from data. Estimation of the best-fitting parameter values, as well as of the uncertainty in these estimates, can be automated by sampling algorithms like Markov chain Monte Carlo (MCMC). The high interpretability and flexibility of this approach have led to a huge paradigm shift in scientific fields ranging from Cognitive Science to Data Science and Quantitative Finance. PyMC3 is a new Python module that features next-generation sampling algorithms and an intuitive model specification syntax. The whole code base is written in pure Python and just-in-time compiled via Theano for speed. In this talk I will provide an intuitive introduction to Bayesian statistics and show how probabilistic models can be specified and estimated using PyMC3.
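To illustrate what "estimation by MCMC" automates, here is a minimal hand-written random-walk Metropolis sampler in plain Python. It is not PyMC3's API or one of its more advanced samplers (such as the No-U-Turn Sampler); the model is an assumption chosen for illustration: an unknown mean `mu` with a flat prior and normally distributed data with known standard deviation 1.

```python
import math
import random


def log_posterior(mu, data, sigma=1.0):
    """Unnormalised log posterior of mu: flat prior, Normal(mu, sigma) likelihood."""
    return -sum((x - mu) ** 2 for x in data) / (2.0 * sigma ** 2)


def metropolis(data, n_samples=20000, step=0.5, seed=42):
    """Random-walk Metropolis: draw correlated samples from p(mu | data)."""
    rng = random.Random(seed)
    mu = 0.0
    current = log_posterior(mu, data)
    samples = []
    for _ in range(n_samples):
        proposal = mu + rng.gauss(0.0, step)  # symmetric random-walk proposal
        candidate = log_posterior(proposal, data)
        # Accept with probability min(1, p(proposal) / p(mu)).
        if candidate >= current or rng.random() < math.exp(candidate - current):
            mu, current = proposal, candidate
        samples.append(mu)  # a rejected move repeats the current value
    return samples


data = [4.8, 5.1, 5.3, 4.9, 5.0, 5.2, 4.7, 5.0]
samples = metropolis(data)[2000:]  # discard burn-in while the chain walks away from 0
posterior_mean = sum(samples) / len(samples)
```

Because this toy model has a closed-form posterior, Normal(mean of the data, sigma / sqrt(n)), the sampler can be checked: `posterior_mean` should be close to 5.0 and the spread of `samples` close to 1 / sqrt(8), roughly 0.35. In a probabilistic programming library the model is declared and the sampling loop is handled for you.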

Watch

Simon Cross - Conversing with people living in poverty

Simon Cross - Conversing with people living in poverty [EuroPython 2014] [24 July 2014] Vumi is a text messaging system designed to reach out to those in poverty on a massive scale via their mobile phones. It's written in Python using Twisted. This talk is about how and why we built it and how you can join us in making the world a better place. ----- 43% of the world's population live on less than €1.5 per day. The United Nations defines poverty as a "lack of basic capacity to participate effectively in society". While we often think of the poor as lacking primarily food and shelter, the UN definition highlights their isolation. They have the least access to society's knowledge and services and the most difficulty making themselves and their needs heard in our democracies. While smartphones and an exploding ability to collect and process information are transforming our access to knowledge and the way we organize and participate in our societies, those living in poverty have largely been left out. This has to change. Basic mobile phones present an opportunity to effect this change [3]. Only three countries in the world have fewer than 65 mobile phones per 100 people [4]. The majority of these phones are not Android phones or iPhones, but they nevertheless provide a means of communication: voice calls, SMSes [6], USSD [7] and instant messaging. By comparison, 25 countries have less than 5% internet penetration [5]. Vumi [1] is an open source text messaging system designed to reach out to those in poverty on a massive scale via their mobile phones. It's written in Python using Twisted. Vumi is already used to: * provide Wikipedia access over USSD and SMS in Kenya [8]. * register a million voters in Libya [10]. * deliver health information to mothers in South Africa [9]. * prevent election violence in Kenya [11].
This talk will cover: * a brief overview of mobile networking and cellphone use in Africa * why we built Vumi * the challenges of operating in unreliable environments * an overview of Vumi's features and architecture * how you can help! Vumi features some cutting-edge design choices: * horizontally scalable Twisted processes communicating using RabbitMQ. * declarative data models backed by Riak. * sharing common data models between Django and Twisted. * sandboxing hosted JavaScript code from Python. Overview of challenges Vumi addresses: *Scalability*: Vumi needs to support both small-scale applications (demos, pilot projects, applications tailored for a particular community) and large ones (things that everyone within a country might use). We address this using Twisted workers that exchange messages via RabbitMQ and store data in Riak. Having projects share RabbitMQ and Riak instances significantly reduces the overhead for small projects (e.g. it's not cost-effective to launch the recommended minimum of five Riak servers for a small project). *Barriers to entry*: Often the people with good ideas lack one or more of the things needed to run a production system themselves, e.g. capital, time or stable infrastructure. We address this by providing a hosted Vumi instance that runs sandboxed JavaScript applications. All the application author needs is an idea and the ability to write JavaScript and upload it to our servers. The target audience here is African entrepreneurs at incubator spaces like iHub (Nairobi), kLab (Kigali), BongoHive (Lusaka) and JoziHub (Johannesburg). *Unreliable third-party systems*: It's one thing for parts of one's own system to go down; it's another for crucial third-party systems to go down. Vumi takes an SMTP-like approach to solving this and uses persistent queues, so that messages can back up in the queue while third-party systems are down and be processed when they become available again.
We also report back to the application that initiated the messages whether third-party messaging systems have accepted or rejected them. Vumi is developed by the Praekelt Foundation [2] (and individual contributors!). [1]: <http://vumi.org/> "Vumi" [2]: <http://praekeltfoundation.org/> "Praekelt Foundation" [3]: <http://www.youtube.com/watch?v=0bXjgx4J0C4#t=20> "Spotlight on Africa" [4]: <http://en.wikipedia.org/wiki/List_of_countries_by_number_of_mobile_phones_in_use> [5]: <http://en.wikipedia.org/wiki/List_of_countries_by_number_of_Internet_users> [6]: <http://en.wikipedia.org/wiki/Short_Message_Service> [7]: <http://en.wikipedia.org/wiki/Unstructured_Supplementary_Service_Data> [8]: <http://blog.praekeltfoundation.org/post/65981723628/wikipedia-zero-over-text-with-praekelt-foundation> [9]: <http://blog.praekeltfoundation.org/post/65042080515/mama-launches-healthy-family-nutrition-programme> [10]: <http://www.libyaherald.com/2014/01/01/over-one-million-register-for-constitutional-elections-on-final-sms-registration-day>
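The "SMTP-like" store-and-forward idea behind Vumi's handling of unreliable third-party systems can be sketched in plain Python. This is not Vumi's code or API: `StoreAndForward` and `TransportDown` are hypothetical names, and an in-memory `deque` stands in for a persistent queue (the abstract describes Vumi's workers exchanging messages via RabbitMQ), so unlike the real thing this version loses queued messages on restart.

```python
from collections import deque


class TransportDown(Exception):
    """Raised by a (hypothetical) transport when the third-party system is unreachable."""


class StoreAndForward:
    """Queue outbound messages and retry them, in order, until a transport takes them."""

    def __init__(self, transport, on_status):
        # transport(message) -> True if accepted, False if rejected;
        # it raises TransportDown while the third-party system is down.
        self.transport = transport
        # on_status(message, status) reports the outcome back to the application.
        self.on_status = on_status
        self.pending = deque()

    def send(self, message):
        self.pending.append(message)
        self.flush()

    def flush(self):
        """Deliver queued messages; stop at the first outage and keep the rest queued."""
        while self.pending:
            message = self.pending[0]
            try:
                accepted = self.transport(message)
            except TransportDown:
                return  # leave the message queued and retry on the next flush
            self.pending.popleft()
            self.on_status(message, "accepted" if accepted else "rejected")


# A fake gateway for demonstration: down at first, and it rejects empty messages.
class FlakyGateway:
    def __init__(self):
        self.up = False
        self.delivered = []

    def __call__(self, message):
        if not self.up:
            raise TransportDown()
        if not message:
            return False
        self.delivered.append(message)
        return True
```

In use, messages sent while `FlakyGateway.up` is `False` simply accumulate in `pending`; once the gateway comes back and `flush()` is called (in a real system, on a timer or a reconnect event), they are delivered in their original order and the application is told which were accepted and which were rejected.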

Watch

Magdalena Rother - How to become a software developer in science?

Magdalena Rother - How to become a software developer in science? [EuroPython 2014] [24 July 2014] My path from 'Hello world' to software development was long and hard. The approach I learned during my research may help you to create high-quality software and improve as a developer. The talk covers how to benefit from your non-IT knowledge, how to atomize your project, and how collaboration accelerates your learning. ----- **Goal**: give practical tools for improving skills and software quality to people with a background other than IT. Eight years ago, as a plant biologist, I knew almost nothing about programming. When I took a course in Python programming, I found it so fascinating that it altered my entire career: I became a scientific software developer. It was long and hard work to get from the level of 'Hello world' to the world of software development. The talk will cover how to embrace a non-IT education as a strength, how and why to atomize programming tasks, and the importance of doing side projects. ### 1. Embrace your background Having domain-specific knowledge from a field other than IT helps you to communicate with the team, the users and the group leader. It prevents misunderstandings and helps to define features better. One key step is to systematically apply precise domain-specific terminology in the code, e.g. when naming objects, methods or functions. Another is to describe the underlying scientific process step by step as a Use Case and write it down in pseudocode. ### 2. Atomisation Having a set of building blocks in your software helps to define responsibilities clearly. Smaller parts are easier to test, release and change. Modular design makes the software more flexible and avoids the Blob and Lava Flow anti-patterns. When using object-oriented programming, a rule of thumb is that an object (in Python, also a method) should do only one thing. You can express this Single Responsibility Principle as a short sentence for each module.
Another practical step is to introduce Design Patterns that help to decouple data from its internal representation. As a result, your software becomes more flexible. ### 3. Participating in side projects Learning from others is a great opportunity to grow. Through side projects you gain a fresh perspective and learn about best practices in project management. You gain new ideas for improvement and become aware of difficulties in your own project. You can easily participate in a scientific project by adding a small feature, writing a test suite or providing a code review for part of a program. In summary, in scientific software development, using domain-specific knowledge, atomising software, and participating in side projects are three things that help to create high-quality software and to continuously improve as a developer. The talk will address challenges in areas where science differs from the business world. It will present general solutions one might use for software developed in a scientific environment for research projects, rather than discussing particular scientific packages. ### Qualifications During my PhD I developed software for 3D RNA modeling (www.genesilico.pl/moderna/) that resulted in 7 published articles. I am a coauthor of a paper on bioinformatics software development. Currently I am actively developing systems biology software in Python at the Humboldt University Berlin (www.rxncon.org).

Watch

Ralph Heinkel - Combining the powerful worlds of Python and R